AI Model Design

Our Values: Users should set the targets for AI, and these targets shall take precedence over academic and industrial priorities.

Our mission is to enable customers to design ML and AI that:

1. Enables a tenfold improvement in a defined user experience

2. Protects the privacy and rights of humans

3. Mitigates technical and clinical risks

4. Can be freely released when it is in the public interest

We maintain strong co-development partnerships with:

The 'DAR Design Framework': A Unique Ethical AI Toolset

We encourage the use of these privacy-protecting development tools:

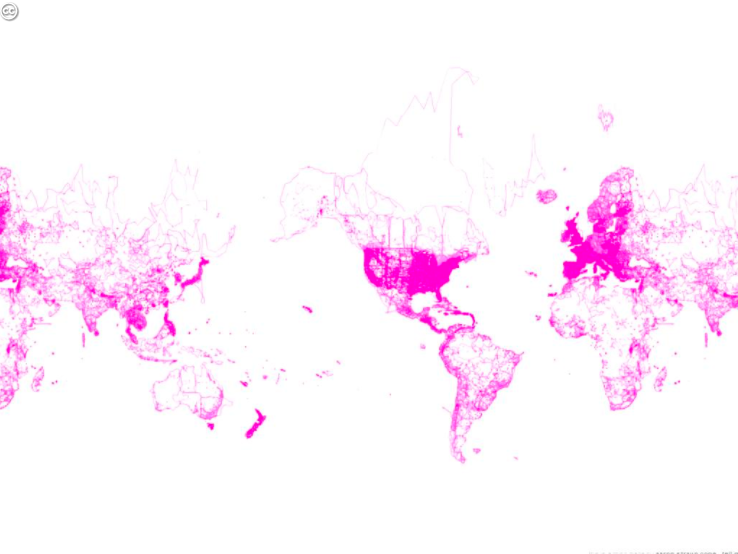

These tools enable AI-powered models to be deployed globally.

- PRIDAR: a method of prioritising the ethical and safe development of AI solutions

- VOICEDAR and GAPDAR: enable users to draw inferences from human-to-human and human-to-computer interactions

- WHATDAR: enables users to gather insight from unstructured audio and text conversations

- NUDGESHARE: an ethical method of gathering self-reported data from the public

Our Professional Practice

We encourage customers to commit to:

User Centred Design:

- We help customers understand their role within the ecosystem of AI funders, users and suppliers

- We support users and subject matter experts committed to the development of ethical AI through user group moderation and insight development. To join a group, or to form a new one, contact us.

- We are committed to continuously gathering and acting upon model feedback

Privacy by Design

- Implementing good practice as defined by the ICO

- Designing systems that do not gather personally identifiable data

- Designing systems that put users in control of the data they share

- Enabling CarefulAI system and model users to share data with others on the basis of informed, explicit consent
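A minimal sketch of the last three points, assuming records are plain dictionaries, that the personally identifiable fields are known in advance, and that explicit consent is recorded per user and per recipient. `PII_FIELDS`, `ConsentRegistry` and `share_record` are hypothetical names used for illustration only, not part of any CarefulAI system or library.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical set of fields treated as personally identifiable.
PII_FIELDS = {"name", "email", "phone", "nhs_number", "address"}


@dataclass
class ConsentRegistry:
    # user_id -> set of recipients the user has explicitly agreed to share with
    grants: dict = field(default_factory=dict)

    def grant(self, user_id: str, recipient: str) -> None:
        self.grants.setdefault(user_id, set()).add(recipient)

    def revoke(self, user_id: str, recipient: str) -> None:
        self.grants.get(user_id, set()).discard(recipient)

    def allows(self, user_id: str, recipient: str) -> bool:
        return recipient in self.grants.get(user_id, set())


def strip_pii(record: dict) -> dict:
    """Return a copy of the record with every field in PII_FIELDS removed."""
    return {k: v for k, v in record.items() if k not in PII_FIELDS}


def share_record(record: dict, user_id: str, recipient: str,
                 consent: ConsentRegistry) -> Optional[dict]:
    """Share a PII-free copy only if the user has explicitly consented; otherwise return None."""
    if not consent.allows(user_id, recipient):
        return None
    return strip_pii(record)


if __name__ == "__main__":
    consent = ConsentRegistry()
    record = {"name": "A. User", "email": "a@example.org", "mood_score": 7}
    print(share_record(record, "u1", "research_partner", consent))  # None
    consent.grant("u1", "research_partner")
    print(share_record(record, "u1", "research_partner", consent))  # {'mood_score': 7}
```

Sharing defaults to "no" until consent exists, and revoking consent immediately stops further sharing.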

Managing Risk in Design

- PRIDAR: a method for prioritising the risks associated with individual AI-driven solutions

- VALDAR: an approach to monitoring the value and safety of AI

Managing Compliance by Design:

For example, in healthcare:

- BS 30440

- NICE, MHRA, ICO, MDR, EU and FDA regulations

- DCB0129 and DCB0160

- ISO 9001, 14971, 13485, 27001, 27701

- IEC 62304

- Purchasing standards, e.g. DTAC

Managing the Data Pipeline and Production using LDP & Federated ML to GDS Standards:

- Audit data inputs and outputs and look for subgroup bias

- Apply simple design, sanity and fairness checks to external data sources

- Use adaptive stress testing to look for model weaknesses, e.g. AdaStress

- Employ interpretable models in high risk environments

- Peer review training scripts

- Avoid external dependencies

- Automate feature, parameter and model selection and configuration

- Ensure all features have an owner and a well documented rationale

- Version models and data sources

- Support model card reporting and AI assurance, e.g. MCT, CAF

- Use 'red teams' and GANs to identify system weaknesses, e.g. Infodemics
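Auditing outputs for subgroup bias can start with a simple comparison of metrics across groups. The sketch below assumes binary labels and predictions and a single grouping attribute; it reports per-group accuracy and positive-prediction rate and flags any group whose accuracy trails the best group by more than a tolerance. The function name and the 0.05 tolerance are illustrative assumptions, not a recommended threshold.

```python
from collections import defaultdict


def subgroup_report(y_true, y_pred, groups, tolerance=0.05):
    """Per-group accuracy and positive-prediction rate, plus groups flagged for review.

    A group is flagged when its accuracy is more than `tolerance` below the
    best group's accuracy (an illustrative rule, not a regulatory standard).
    """
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(truth == pred)
        c["positive"] += int(pred == 1)

    report = {
        group: {
            "n": c["n"],
            "accuracy": c["correct"] / c["n"],
            "positive_rate": c["positive"] / c["n"],
        }
        for group, c in counts.items()
    }
    if not report:
        return report, []
    best = max(r["accuracy"] for r in report.values())
    flagged = sorted(g for g, r in report.items() if best - r["accuracy"] > tolerance)
    return report, flagged


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 1, 0, 0]
    y_pred = [1, 0, 1, 1, 1, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    report, flagged = subgroup_report(y_true, y_pred, groups)
    print(report)
    print("flagged:", flagged)  # flagged: ['B']
```

Accuracy is only one lens; the same loop can be extended to other per-group metrics (for example false negative rate) where those matter more for the use case.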
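Sanity checks on an external data source can be as simple as validating each incoming row against an explicit, hand-written schema before it reaches training. In this sketch the `EXPECTED` schema (column names, types and ranges) is hypothetical; a real pipeline would take it from the data source's documentation.

```python
import numbers

# Hypothetical schema: column -> (expected type, minimum, maximum); None means unbounded.
EXPECTED = {
    "age": (int, 0, 120),
    "heart_rate": (numbers.Real, 20, 250),
    "site": (str, None, None),
}


def check_rows(rows, expected=EXPECTED):
    """Return human-readable problems found in the rows; an empty list means all checks passed."""
    problems = []
    for i, row in enumerate(rows):
        missing = set(expected) - set(row)
        if missing:
            problems.append(f"row {i}: missing columns {sorted(missing)}")
        for col, (typ, lo, hi) in expected.items():
            if col not in row:
                continue  # already reported as missing
            value = row[col]
            if value is None:
                problems.append(f"row {i}: {col} is null")
            elif not isinstance(value, typ):
                problems.append(f"row {i}: {col} has type {type(value).__name__}, expected {typ.__name__}")
            elif (lo is not None and value < lo) or (hi is not None and value > hi):
                problems.append(f"row {i}: {col}={value} outside [{lo}, {hi}]")
    return problems


if __name__ == "__main__":
    rows = [
        {"age": 34, "heart_rate": 72, "site": "north"},
        {"age": 150, "heart_rate": 72.5, "site": "south"},
    ]
    for problem in check_rows(rows):
        print(problem)  # row 1: age=150 outside [0, 120]
```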
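Automating parameter selection means the choice is made by a repeatable search against held-out data rather than by hand. As a deliberately small, dependency-free illustration, the sketch below uses a one-dimensional k-nearest-neighbours classifier (chosen here only as an example model) and selects `k` by exhaustive grid search on a validation set; the toy data are made up for the example.

```python
def knn_predict(train, x, k):
    """Majority vote of the k training points closest to x (1-D feature, binary labels)."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return int(2 * votes > k)  # strict majority; odd k avoids ties


def validation_accuracy(train, validation, k):
    correct = sum(knn_predict(train, x, k) == y for x, y in validation)
    return correct / len(validation)


def select_k(train, validation, grid):
    """Score every k in the grid and return the best one together with all scores."""
    scores = {k: validation_accuracy(train, validation, k) for k in grid}
    best_k = max(grid, key=lambda k: scores[k])  # max keeps the first of equal maxima
    return best_k, scores


if __name__ == "__main__":
    train = [(1, 0), (2, 0), (3, 1), (4, 0), (6, 1), (7, 1), (8, 1)]
    validation = [(2.9, 0), (3.2, 0), (6.5, 1)]
    best_k, scores = select_k(train, validation, grid=[1, 3, 5])
    print(best_k, scores)
```

Because `max` returns the first maximum, ties are broken by grid order, so the grid should be ordered so that the preferred configuration comes first.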
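One simple way to version models and data sources together is to identify every artefact by a hash of its contents and record which data versions each model version was trained on. The sketch below uses SHA-256 from the standard library and an in-memory manifest; the `Manifest` class and its fields are hypothetical, and a dedicated data and model versioning tool would normally take its place.

```python
import hashlib
import json


def content_version(data: bytes) -> str:
    """Short, deterministic version id derived from the artefact's bytes."""
    return hashlib.sha256(data).hexdigest()[:12]


class Manifest:
    """Hypothetical record linking each model version to the data versions it was trained on."""

    def __init__(self):
        self.entries = {}

    def register_model(self, model_bytes: bytes, data_sources: dict, notes: str = "") -> str:
        model_id = content_version(model_bytes)
        self.entries[model_id] = {
            "data": {name: content_version(blob) for name, blob in sorted(data_sources.items())},
            "notes": notes,
        }
        return model_id

    def to_json(self) -> str:
        return json.dumps(self.entries, indent=2, sort_keys=True)


if __name__ == "__main__":
    manifest = Manifest()
    model_id = manifest.register_model(b"weights-v1", {"train.csv": b"a,b\n1,2\n"}, notes="baseline")
    print(model_id)
    print(manifest.to_json())
```

Identical bytes always produce the same id, so re-registering an unchanged model or dataset does not create a spurious new version.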

Be transparent about the deployment stack:

- Commit to in-country and partner standards, e.g. EU, Google, Microsoft, IBM

- Adapt tooling to user needs

- Build verifiable data logs, e.g. Trillian

- Deploy code effectively, e.g. with Travis CI

- Automate regression tests, e.g. with JUnit or Python test frameworks

- Virtualise model deployment using LDP and federated learning

- Automate model deployment, e.g. with Kubeflow and Docker

- Continuously measure model performance
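Verifiable data logs such as Trillian are built on Merkle trees: every entry is hashed into a tree whose root commits to the whole log, so altering any past entry changes the root. The sketch below computes a Merkle tree root using the hashing rules from RFC 6962 (Certificate Transparency), a scheme Trillian supports for its logs. It illustrates the idea only; it is not Trillian's API, and it omits inclusion and consistency proofs.

```python
import hashlib


def leaf_hash(entry: bytes) -> bytes:
    # RFC 6962: leaves are hashed with a 0x00 prefix
    return hashlib.sha256(b"\x00" + entry).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    # RFC 6962: interior nodes are hashed with a 0x01 prefix
    return hashlib.sha256(b"\x01" + left + right).digest()


def merkle_root(entries: list) -> bytes:
    """Merkle Tree Hash of a list of byte strings, following RFC 6962 section 2.1."""
    n = len(entries)
    if n == 0:
        return hashlib.sha256(b"").digest()
    if n == 1:
        return leaf_hash(entries[0])
    k = 1
    while k * 2 < n:  # k = largest power of two strictly less than n
        k *= 2
    return node_hash(merkle_root(entries[:k]), merkle_root(entries[k:]))


if __name__ == "__main__":
    log = [b"event-1", b"event-2", b"event-3"]
    tampered = [b"event-1", b"EVENT-2", b"event-3"]
    print(merkle_root(log).hex())
    print(merkle_root(log) != merkle_root(tampered))  # True
```

Publishing the root periodically lets auditors confirm later that the log they are shown has not been rewritten.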
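An automated regression test pins a model's outputs on a fixed set of inputs so that any unintended change fails the build. The sketch uses Python's standard `unittest` module; `toy_model` is a hypothetical stand-in for the real predictor, and the expected values in `GOLDEN_CASES` are simply what that stand-in produces, used here for illustration.

```python
import unittest


def toy_model(x: float, y: float) -> float:
    """Hypothetical stand-in for the model under test; replace with the real predictor."""
    return round(0.5 * x + 0.25 * y, 4)


# Illustrative golden cases: (inputs, expected output) from an approved model version.
GOLDEN_CASES = [
    ((2, 4), 2.0),
    ((1, 2), 1.0),
    ((0, 0), 0.0),
]


class ToyModelRegressionTest(unittest.TestCase):
    def test_matches_golden_outputs(self):
        for inputs, expected in GOLDEN_CASES:
            with self.subTest(inputs=inputs):
                self.assertAlmostEqual(toy_model(*inputs), expected, places=4)


if __name__ == "__main__":
    unittest.main()
```

Run in CI on every change, a failing case shows exactly which input drifted, which makes intended model updates an explicit decision to refresh the golden values.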
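Federated learning keeps raw data on each participant's site and shares only model updates. A common aggregation rule is federated averaging (FedAvg), where the server averages client parameters weighted by the number of examples each client trained on. The sketch shows only that aggregation step, with models represented as plain lists of floats; local training, secure transport and any LDP step are out of scope, and the function name is hypothetical.

```python
def federated_average(client_updates):
    """Weighted average of client parameter vectors (the FedAvg aggregation step).

    client_updates: list of (parameters, num_examples) pairs, where parameters
    is a list of floats of the same length for every client.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples reported by clients")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for params, n in client_updates:
        if len(params) != dim:
            raise ValueError("all clients must send parameters of the same length")
        weight = n / total
        for i, value in enumerate(params):
            averaged[i] += weight * value
    return averaged


if __name__ == "__main__":
    updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
    print(federated_average(updates))  # [2.5, 3.5]
```

Weighting by example count stops a client with very little data from pulling the shared model as hard as a client with a lot.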
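Continuously measuring model performance usually means comparing a rolling window of recent outcomes with an agreed baseline and raising an alert when the gap grows too large. The sketch below assumes that ground-truth outcomes eventually arrive for each prediction; the class name and the window size, baseline and tolerance values are hypothetical and would be agreed with users.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent `window` predictions and flags degradation."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # 1 for a correct prediction, 0 otherwise

    def record(self, prediction, actual) -> None:
        self.outcomes.append(int(prediction == actual))

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self) -> bool:
        """True once the window is full and accuracy is below baseline minus tolerance."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance


if __name__ == "__main__":
    monitor = RollingAccuracyMonitor(baseline=0.90, tolerance=0.05, window=10)
    for pred, actual in [(1, 1)] * 8 + [(1, 0)] * 2:
        monitor.record(pred, actual)
    print(monitor.accuracy(), monitor.degraded())  # 0.8 True
```

Waiting for a full window before alerting avoids false alarms from the first handful of predictions.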

Be transparent in how models are licensed

- With the agreement of customers, generalised ML/AI models derived from AI for Good work are made available free of charge to UK customers (see Terms of Use).

- Models that cannot be released freely are available under licence, for a monthly fee, as supported products for specific use cases. The terms under which each model is released are shown in our Licence Builder, which can also be used to propose the terms under which you wish to apply a model.