CarefulAI

CarefulAI was formed to satisfy the growing demand for ethical AI and behavioural science services in the health and social care sector.

Our team uniquely couples staff who have 20 years' experience of user-led co-production with a growing ethical AI toolset:

Our Approach to AI Design Management:

- PRIDAR: a method for prioritising the risks associated with individual AI-driven care solutions

- PATHDAR: an approach for assessing the risks associated with AI in a pathway

- VALDAR: an approach to monitoring the value and safety of AI in a pathway

Our AI Production tools and APIs for classification and prediction:

- GAPDAR: privacy-preserving data modelling using local differential privacy

- BOTDAR: a framework for the swift creation of Text/SMS chatbots

- SICDAR: screen for language associated with negative thoughts

- COVDAR: identification of breathlessness in speech

- EMPDAR: affective engagement during telephone/web meetings

- FAQDAR: topic modelling from recorded audio
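As an illustration of the local differential privacy idea mentioned for GAPDAR, the following is a minimal sketch of randomised response, one of the simplest local differential privacy mechanisms. It is not GAPDAR's implementation, which is not described here. The function names `randomized_response` and `estimate_proportion` and the parameter `epsilon` are assumptions made for this example only.

```python
import math
import random


def randomized_response(true_bit: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true answer with probability e^eps / (1 + e^eps), otherwise flip it.

    The noise is added on the user's side, so the collector never sees the raw answer.
    Hypothetical helper for illustration; epsilon must be greater than 0.
    """
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return true_bit if rng.random() < p_truth else not true_bit


def estimate_proportion(reports: list[bool], epsilon: float) -> float:
    """Recover an unbiased estimate of the true 'yes' rate from the noisy reports."""
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    observed = sum(reports) / len(reports)
    # observed = rate * p_truth + (1 - rate) * (1 - p_truth); solve for rate.
    return (observed - (1.0 - p_truth)) / (2.0 * p_truth - 1.0)
```

A smaller `epsilon` gives each individual stronger deniability but makes the population estimate noisier, so the value chosen is a trade-off between privacy and accuracy.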

Our customer base is the health and social care ecosystem, which is a highly regulated environment. We, our suppliers, partners and customers need to evidence compliance with, or show that we are working towards, the following on all developments:

- NICE, MHRA, ICO, EU and FDA Regulations

- DCB0129/0160

- ISO 9001, 13485, 27001, 27701

- Purchasing standards e.g. DTAC

Our commitment to Ethical AI & Assurance

Deploying Professional Practice

- Helping customers understand their role within the AI funder, user and supplier ecosystem

- Assisting customers to prioritise development of AI

- Supporting users and subject matter experts committed to the development of ethical AI, via user group moderation and insight development. To join a group, or to form a new group, contact us

- We are committed to continuously gathering and acting upon user experience feedback

- Each member of staff delivers 75 days of pro bono work on social good initiatives e.g. supporting open source development projects in the IXN for the NHS

- We are committed to in-country and partner AI standards e.g. EU, Google, Microsoft, IBM

- Contributing to AI Best Practice Frameworks and Standards e.g. ICO

- Leading Model Card Reporting within Pathways, and AI Assurance e.g. MCT, CAF

- Using 'Red Teams' and GANs to identify system weaknesses e.g. Infodemics

- Using adaptive stress testing techniques on high-risk systems e.g. Adastress

- Building Verifiable Data Logs e.g. Trillian

- Supporting tool development e.g. Tooling

Applying standards and testing:

- Managing the data Pipeline and Production using LDP & Federated ML to TSSM to GDS Standards

- Code Deployment i.e. Travis

- Automated regression Tests i.e. JUnit, Python

- Model and Data Versioning for Design and Compliance e.g. GDP

- Automating Model Deployment i.e. Kubeflow and Docker

Applying good Practice

- Be led and auditable by end users: specifically clinicians and the public

- Employ interpretable models by default

- Keep designs simple, and run sanity and fairness checks on external data sources

- Ensure all features have an owner and a well-documented rationale, and automate where possible

- Peer review training scripts

- Avoid external dependencies

- Virtualise model deployment utilising LDP and Federated Learning

- Automate parameter and model selection and configuration

- Continuously measure model performance

- Audit data inputs and outputs and look for subgroup bias

- Version models and data sources
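The practice of auditing outputs for subgroup bias can be sketched as a per-subgroup accuracy comparison. This is a minimal illustration under stated assumptions, not a description of CarefulAI's tooling: the record layout `(subgroup, y_true, y_pred)` and the function names `subgroup_accuracy` and `max_accuracy_gap` are hypothetical, and accuracy is only one of several metrics an audit might compare.

```python
from collections import defaultdict


def subgroup_accuracy(records):
    """Return accuracy per subgroup.

    records: iterable of (subgroup, y_true, y_pred) tuples (assumed layout).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two subgroups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Example: the model is right for every "a" record but only half of the "b" records.
example = [("a", 1, 1), ("a", 0, 0), ("b", 1, 0), ("b", 0, 0)]
per_group = subgroup_accuracy(example)
gap = max_accuracy_gap(per_group)
```

A large gap does not prove unfairness on its own, but it flags where a clinician or user group should look more closely.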

Our Business Model

Our business model is to charge for our services. We do not sell our ethical AI toolset. We use it to enable the health and social care ecosystem to launch ethical, data-driven solutions effectively and safely. Examples of care services we have co-developed within the NHS ecosystem include:

- Social isolation support e.g. CarerCare using GAPDAR's federated learning model & nudge model

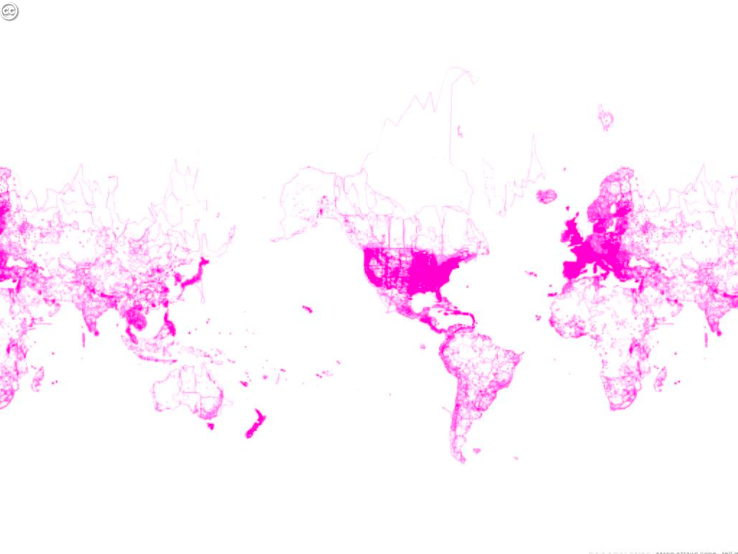

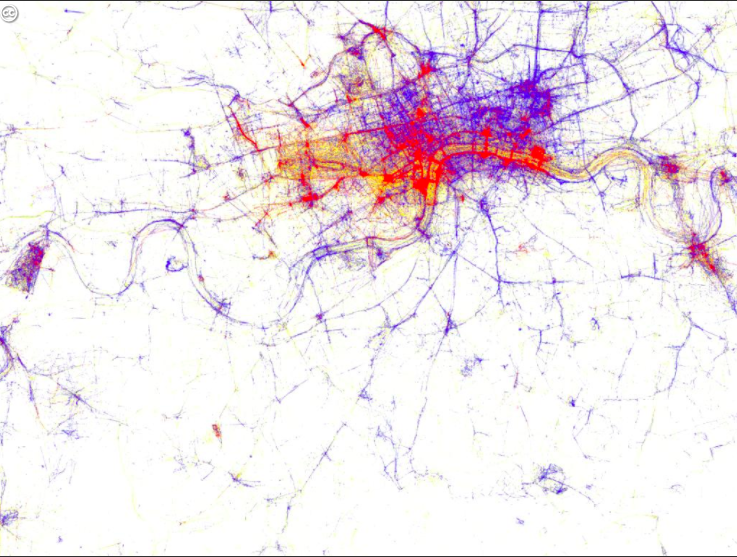

- Public health monitoring e.g. Wellbeing Maps using GAPDAR's local differential privacy model

- Triage chatbots e.g. The Mental Health Chatbot using the BOTDAR framework and the SICDAR API

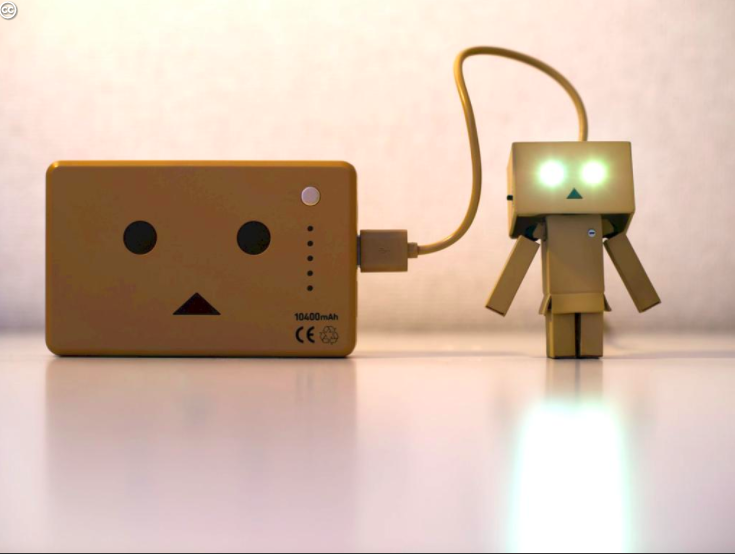

We measure success by the effect we have. For example, we aim to turn this...

Into this...

We start by engaging users, and we do this very well...

Then we engage the people users trust...

The great and the good share their insight & publications with us...

We gather global data and insights on the issues arising...

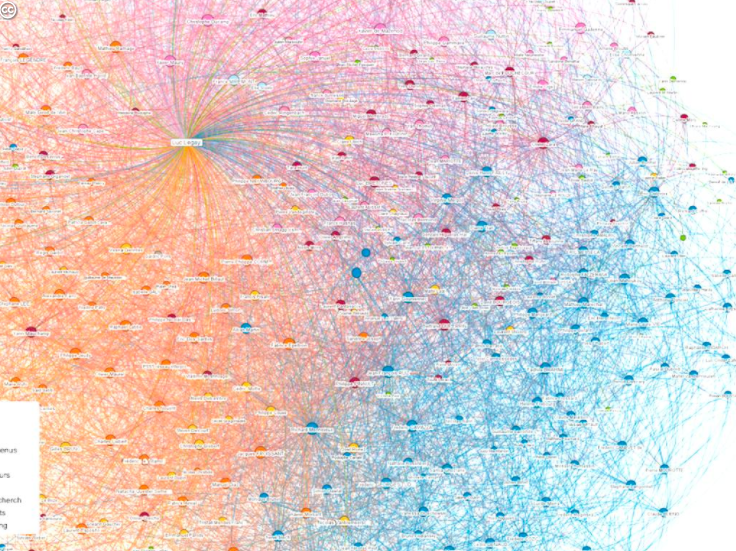

We review the relationships between the models

We develop new models and systems that aim to improve the lives of specific populations

We look for variances that will affect specific user experiences

We design models so that a user can control how data and insights are used...

We enable users to design experiences that they value...

We then power systems to deliver the desired user experience.
