Dear Mr Stubbs

Dear Stubbsie

I know this is a strange letter. But stick with me. I need to write something to you.

It relates to how AI could be implemented in the NHS. I would trust AI in your hands. It is my view that it should be led by users and the public, and by users I mean people like you: a paramedic and ambulance driver.

I will need to explain some stuff about AI in the NHS: concepts you will have come across, like risk mitigation and barriers to change.

Then I am going to get to the crux: the desperate need to get beyond the AI hype, and to understand that what we are really talking about in AI is computerised advanced guessing systems.

The success or failure of AI in the NHS is going to be dependent upon people who have empathy and curiosity. People who will lead design, development and deployment of AI, not wait till it is presented to them. People who prefer action rather than talk. People who care about people, not kudos.

People like you.

AI in the NHS

AI is going to change the NHS. But the acceptable level of change depends on one's risk perspective, motivations and resources. It is much like making a change to a moving ambulance, where the change can be as big as replacing the paramedics with robots, or as small as washing the vehicle. It is possible, but it is going to be hard, because who wants to slow down an ambulance?

Risk Mitigation

Using the ambulance analogy, there are many changes one could make to an ambulance. Some are very risky, like swapping a paramedic for a robot. Swapping an ambulance for a new type of ambulance is less risky. Cleaning an ambulance has even less risk. That said, you would do none of this while the ambulance was in motion.

If you are going to make a change to an ambulance, it is probably best done in a workshop. Us AI folk have a fancy name for workshops: we call them sandboxes. Places where you can tinker, and if you break something it does not really matter.

There are certain people who need to be confident that a change to an ambulance is safe. They usually have a checklist and a list of hurdles that suppliers of kit need to clear. Us AI folk have similar people. We call them Clinical Safety Officers, and they work to a standard. Their checklists are called DCBs, DHT and DTAC.

Much like the checklist you use to sign an ambulance out of the workshop, us AI folk have similar checklists. We call them software licences and agreements, like the AGPL and Lambert agreements (for when non-NHS folk use our stuff).

It is a really bad idea to tinker with all your ambulances in the workshop at the same time, so you need some way of choosing what to tinker with and why: your job list. We have the same kind of list, but we have a posh name for it. We call it a portfolio, which we manage.

More on the AI world themes

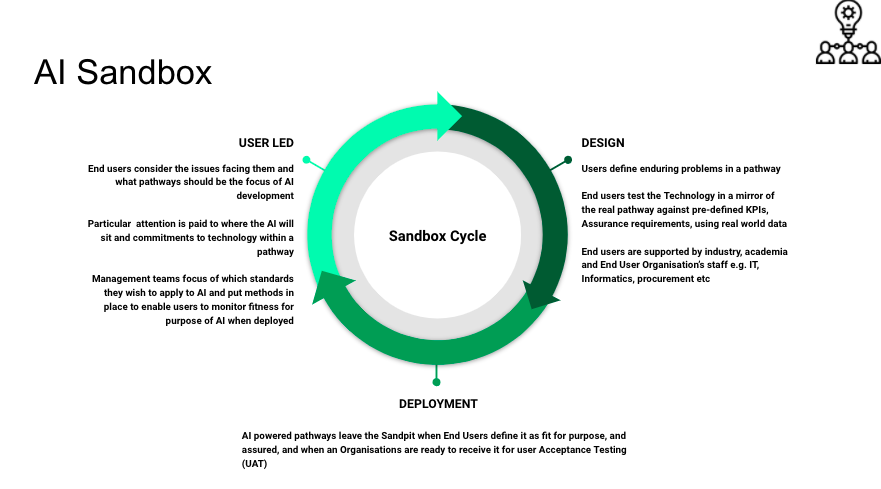

AI Sandboxes

These are safe places where you can play with AI to see if and how it can be broken, using real data and real users. It is much like when somebody gives the ambulance service a new vehicle to test before it buys one. Nothing survives contact with real people and data, and ideally these sandboxes should have both. As with an ambulance, if you can get access to the people who specify, develop, test, make, supply and buy them, you can influence what is made. The same can be said of AI. The most effective scenario is that local health board management, clinical and IT teams set up their own AI sandboxes with academics and local firms to drive AI developments.

If you were not involved in the design of an ambulance you end up using, you would be circumspect about its design. So it is with AI. AI developments should be clinically led, with pre-defined key performance indicators (KPIs). These developments should have support from end user to board level. They should also have Intellectual Property (IP) and Information Governance (IG) agreements in place, and a model explaining how data is shared in line with Information Commissioner's Office (ICO) guidelines. Not having these agreements and models in place would be like saying to you: "Thanks for your help designing this fab new ambulance. You and your health board are not going to receive a penny, and copyright of your ideas is not going to be attributed to you or your health board" (which is against the law).

A visualisation of the processes in an AI Sandbox is shown below. I hope this helps.

AI Standards

Imagine you were designing a new type of vehicle that had never been tested. You would probably end up developing your own standards, or applying existing ones to the new vehicle where appropriate. This is akin to what is happening in AI at the moment. AI compliance is a new and changing field, so standards are changing rapidly. Organisations such as the government, the MHRA and NICE have different evidence criteria and standards, and they are trying to decide what fits.

There is some good news. Standards that directly affect AI are DCB0129 and DCB0160. These require that all health IT projects have a trained Clinical Safety Officer in place. Such officers control the creation and release of a clinical risk (hazard) and mitigation log, which needs to be made available to users. These logs are a bit like the manual you get when you buy a vehicle, showing you what to do when things go wrong, like a tyre puncture.

A similar standard is the NICE Digital Health Technology Evidence Framework (NICE DHT). In essence, it says that for a particular pathway one should ensure evidence exists about a particular digital health technology, e.g. AI. The level of evidence depends on whether the AI aims to have a clinical outcome or not. There are three tiers in NICE DHT. The evidence for the lowest tier (Tier 1) can take up to 6 months to gather; the evidence for the highest (Tier 3) can take between 2 and 5 years. These standards are comparable to the standards the government requires medicine providers to meet. The main difference is that, in practice, NICE Tier 1 evidence can be gathered relatively simply; however, it needs a collaboration between the NHS, academia and industry.

Other relevant standards are covered in the government's Digital Technology Assessment Criteria (DTAC), which operates a bit like an MOT testing schedule. Unlike an MOT, DTAC applies to new AI as well as old AI.

A Commercial Strategy

The commercial strategy for selling or buying AI should depend upon your power. If you are involved in designing and developing AI, then you are in a position of power and can negotiate a good deal. If you wait until after the AI is developed, your power is reduced. Using the ambulance design analogy, if it is your design, built with your parts, then you would negotiate a good deal and licence its use. So it is with AI. But in this case the parts are the data you share to create the AI, and the time you spend interpreting the insights and predictions the AI makes.

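It is appropriate to suggest that NICE DHT Tier 1 AI be released under a licence that does not preclude or prejudice its use. One example is the MIT licence, which lets the private sector build the AI into their solutions and sell it. Another is the AGPL, a copyleft licence that requires anyone who modifies the AI and distributes it, or offers it as a network service, to share their source code under the same terms, so it cannot be locked up and re-sold as a closed product. This is a bit like allowing another ambulance service to use your ambulance design, but preventing them from selling it back to you as their own closed design.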

NICE DHT Tier 2-3 AI is best disseminated under a non-exclusive Lambert agreement, because such AI projects often involve partnerships with academia and industry. Lambert agreements are template documents freely available from the Intellectual Property Office's website. This is much like saying: you can sell the ambulance design, but my health board gets some revenue back from each deal.

Over and above the clinical risk of bringing AI into use, there is a cost risk. The principal cost risk is the time and effort involved in bringing a NICE Tier 1-3 solution to bear within a pathway. For a NICE DHT Tier 1 service this can be around £0.5 million; for a Tier 2-3 product it could be between £2 million and £5 million.

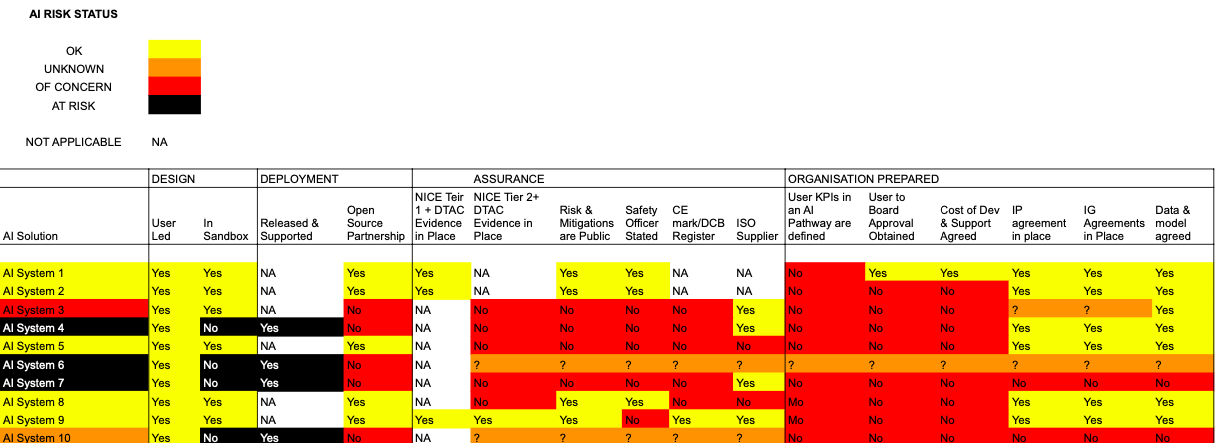

AI Portfolio Monitoring

AI portfolio monitoring is, in essence, a job checklist. It guides you in deciding which jobs to do first. It is a good idea to have a set of criteria for assessing the risk of an ambulance failing. So it is with AI. Below are the CarefulAI Criteria we use with NHS customers to prioritise AI jobs. The riskier the status, the more important it is to address the AI issue. A direct analogy is an MOT. If your vehicle is a safety risk, it will fail its MOT. If an MOT assessor has some concerns about a vehicle, they will list a series of advisories in your MOT assessment. The main difference with the CarefulAI Criteria is that customers and staff are encouraged to report an AI system's risk status themselves. This is akin to asking an ambulance driver or paramedic to assess their own vehicle for its likely MOT status.

The CarefulAI Criteria are shown below with real examples (and AI System names anonymised)
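The CarefulAI Criteria themselves are not reproduced in this text. The sketch below only illustrates the MOT-style idea behind them. Staff and service users report a status for each AI system, and the riskiest systems go to the top of the job list. The three status names (`fail`, `advisory`, `pass`), the `AISystem` class and the system names are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical statuses borrowed from the MOT analogy, most urgent first.
# These are illustrative only, not the actual CarefulAI Criteria.
STATUS_PRIORITY = {"fail": 0, "advisory": 1, "pass": 2}


@dataclass
class AISystem:
    name: str                                    # anonymised system name
    reports: list = field(default_factory=list)  # statuses reported by staff and users

    def worst_status(self) -> str:
        """Most urgent status anyone has reported; 'pass' if no reports yet."""
        if not self.reports:
            return "pass"
        return min(self.reports, key=STATUS_PRIORITY.__getitem__)


def job_list(systems):
    """Riskiest systems first. sorted() is stable, so ties keep their input order."""
    return sorted(systems, key=lambda s: STATUS_PRIORITY[s.worst_status()])


if __name__ == "__main__":
    portfolio = [
        AISystem("System A", ["pass", "advisory"]),
        AISystem("System B", ["pass"]),
        AISystem("System C", ["advisory", "fail"]),
    ]
    for system in job_list(portfolio):
        print(system.name, system.worst_status())
```

Taking the worst report rather than a majority vote is a deliberate choice in this sketch. One paramedic spotting a dangerous fault should be enough to move a system up the list.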

Models of Adoption

Models for adopting AI in the NHS vary. In the main, it tends to happen by stealth. An AI supplier will propose a non-AI system. They then gather user data and systems data, draw insights, and sell those insights back to the NHS as a service. This is akin to having a speed monitoring system fitted to your ambulance that records how fast you go on and off a 'blue light', and then having that data sold back to the ambulance service as insight. It tends to go down badly.

Another model of adoption is one we call the 2022 framework. In it, the NHS (the AI funder) is prepared to engage us and accepts certain roles in managing the AI development process. This is akin to an ambulance station taking control of a new vehicle, fully aware of its value and maintenance schedule. In this framework, AI users (typically NHS staff and sometimes the public) have a range of roles. Most importantly, they (not just NHS management) understand the value of the AI they are helping to develop, and they manage its deployment and continuous monitoring. In our model they can also switch the AI we develop off. This is much like giving a paramedic the power to say whether they will or will not travel in the ambulance given to them. Our key roles as an AI supplier are to be transparent about the management and protection of data; to work with other suppliers that use the data and insights we use and generate; to make sure that a data protection and GDPR statement is in place before we begin an AI development, and to keep it up to date; and to guide NHS buyers and users through compliance procedures, reducing the technical risks and costs that arise from the systems and methods our AI depends upon. In practice this role is akin to an ambulance supplier posting engineering staff to your station and working with your teams to make sure their vehicles are fit for purpose and nasty surprises are minimised.

On the subject of nasty surprises: what are they, I hear you ask? Well, they can be manifold. Would you believe it, some people think AI is a humanoid-looking robot, and they fear putting their lives in the hands of such creatures! It is often better to describe AI as what it is, e.g., computerised advanced guessing, which is often linked to a computerised system or systems used to deliver help in a care pathway. It is much like the ABS that stops an ambulance from skidding when the driver brakes hard.

Other nasty surprises occur when the AI gets something wrong (and it always will, sometimes) and you cannot switch it off or adapt it without paying lots of money. Avoiding this is often within the NHS's direct control. It is better to buy AI as a service measured against KPIs than as a product under a long-term licence. It is wise to think of AI as a system that can and should change over time: the longer one uses AI, the wiser it should become. But, much like people, this is not always the case. So contracting with AI suppliers as one would with short-term contract labour (with well-defined KPIs) is good practice.
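Buying AI against a KPI, and letting users switch it off, can be pictured as a small piece of control logic. The following is a minimal sketch. The `AIService` class, the KPI name and the 0.90 threshold are hypothetical, and real KPIs would be agreed with clinicians before development starts.

```python
from dataclasses import dataclass


@dataclass
class AIService:
    """Hypothetical AI bought as a service against an agreed KPI.

    The KPI name and threshold are illustrative; real KPIs would be
    agreed with clinicians before development starts.
    """
    kpi_name: str
    min_kpi: float
    enabled: bool = True
    reason: str = ""

    def record_kpi(self, value: float) -> None:
        """Switch the AI off if a measured KPI falls below the agreed level."""
        if value < self.min_kpi:
            self.enabled = False
            self.reason = f"{self.kpi_name} {value:.2f} below agreed {self.min_kpi:.2f}"

    def user_switch_off(self, who: str) -> None:
        """Any user, not just management, can switch the AI off."""
        self.enabled = False
        self.reason = f"switched off by {who}"


if __name__ == "__main__":
    service = AIService(kpi_name="triage accuracy", min_kpi=0.90)
    service.record_kpi(0.93)   # KPI met: stays on
    print(service.enabled)
    service.record_kpi(0.85)   # KPI missed: switched off
    print(service.enabled, service.reason)
```

In this sketch, once the AI is switched off it stays off even if a later KPI reading recovers. Switching it back on is deliberately left as a human decision.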

Empathising with the Issues Facing People

To be a good manager of an ambulance station, you need to understand people's roles and responsibilities, and good practice. As and when you come across people involved in the design, development and implementation of AI, the following descriptions of personas may help you understand what they need to know and the issues they face.

Staff and Patients

Understanding the value of AI

It is useful to think of AI as technology that can be used to classify or predict outcomes, e.g., cancer as shown on an X-ray, or the need to send a person a reminder. When you understand how wrong you can be in that prediction or classification, you are better able to understand the value of AI in your work.

Once you know what you want to classify or predict, and how wrong you can afford to be, you need to ask yourself: what data have I got access to that will enable me to come to that conclusion? This is because AI is not an artificial intelligence; it is a set of techniques for looking at data and changing the way in which systems operate based on that data.

For example, in cancer identification on X-rays, AI will analyse the pixels in the image and come to a conclusion about what it represents, based on what it has been trained to identify.

The Importance of Data

Development of AI is fully dependent on data. Gathering data that enables an AI model to make predictions or classifications that are fit for purpose is a highly governed area. The Data Protection Act and GDPR place significant restrictions on what data can be used and for what purpose. As a consequence, before embarking on use, research or development, it is wise to start with an understanding of the implications and impact of AI on a use case.

The information governance teams who act as guardians of public data will want to consider evidence in data protection impact assessments (DPIAs) and evidence of compliance with the Data Protection Act, GDPR and ICO guidelines.

How do you train AI to classify or predict?

The answer is that you give a data scientist access to information. With that data, a scientist and their computer program will plot the features of the data and come to a conclusion as to what this means or predicts.

This is very much like plotting data on a graph, drawing a line through the points, and using that line to make predictions about new cases. How wrong you can afford to be when drawing the line determines how wrong you can afford to be with your prediction or classification.
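The graph idea can be made concrete with an ordinary least-squares straight line, one of the simplest ways of "drawing a line" through data. The numbers below are invented toy values. Using the worst error on the known points as a rough error band is a simplification. A proper evaluation would check the line against data it has not seen.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def worst_error(xs, ys, slope, intercept):
    """Largest gap between a known point and the line: how wrong the line already is."""
    return max(abs(y - (slope * x + intercept)) for x, y in zip(xs, ys))


if __name__ == "__main__":
    xs = [1, 2, 3, 4, 5]                 # invented toy data
    ys = [2.1, 3.9, 6.2, 7.8, 10.1]
    slope, intercept = fit_line(xs, ys)
    err = worst_error(xs, ys, slope, intercept)
    guess = slope * 6 + intercept        # predict a point we have not seen
    print(f"prediction at x=6: {guess:.2f} (give or take about {err:.2f})")
```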

In the example of the cancer identifier, you would need a high level of confidence that the data accurately represents 'probably cancer' or 'probably not cancer'. The consequences and potential harm resulting from getting this wrong are significant compared to a different analysis used to predict for example whether or not an individual is likely to attend a seminar.
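The "how wrong can you afford to be" question usually becomes a choice of threshold. A model gives each case a score, and anything above the threshold is treated as "probably cancer". The sketch below uses invented scores and labels to show the trade-off. `pick_threshold` and its `max_missed` parameter are illustrative names, not part of any particular tool.

```python
def confusion_at(threshold, scores, labels):
    """Count (missed cases, false alarms) when score >= threshold means 'probably cancer'."""
    missed = sum(1 for s, y in zip(scores, labels) if y and s < threshold)
    false_alarms = sum(1 for s, y in zip(scores, labels) if not y and s >= threshold)
    return missed, false_alarms


def pick_threshold(scores, labels, max_missed):
    """Highest threshold (so fewest false alarms) that misses at most max_missed true cases.

    Lowering the threshold never increases missed cases, so the first threshold
    that meets the limit, scanning downwards, is the highest one that does.
    """
    for t in sorted(set(scores), reverse=True):
        missed, _ = confusion_at(t, scores, labels)
        if missed <= max_missed:
            return t
    return None  # only reached if there are no scores at all


if __name__ == "__main__":
    scores = [0.95, 0.80, 0.70, 0.40, 0.30, 0.20, 0.10]      # invented model scores
    labels = [True, True, False, True, False, False, False]  # invented ground truth
    for allowed in (0, 1):   # cancer-style (no misses) vs seminar-style tolerance
        t = pick_threshold(scores, labels, allowed)
        print(allowed, t, confusion_at(t, scores, labels))
```

With no misses allowed, the threshold has to drop to 0.40, which raises a false alarm on the 0.70 case. Tolerating one miss, as you might for seminar attendance, lets it rise to 0.80 with no false alarms.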

Where is AI technology in Wales?

How AI is deployed

It is common practice for AI technology to be deployed within hardware and software. Increasingly, it is deployed as a service. Users of the technology are licensed to use it under specific terms. Considering those terms is important, particularly where the technology can adapt itself based on the data it is given access to.

Licensing AI’s use

Within the health and social care system of the United Kingdom, a common practice is to require that purchased technology is provided under an open-source licence. This ensures that users are not locked into particular technologies, or into their support and development. That said, your ability to do this will very much depend upon whether you can influence how the AI is made. If an organisation is providing data to train AI, it is in a position to influence the terms of the AI licence.

It is useful to present AI in non-technical terms. One method is to present it within the themes that underpin health and social care service delivery. For example, the themes below set AI developments against the Welsh Care Quality Framework.

Theme: Person Centred Care

Person centred care refers to a process that is people focused, promotes independence and autonomy, provides choice and control and is based on a collaborative team philosophy.

It is possible that AI could be used to understand people’s needs and views, and to help build relationships with family members, e.g., via voice interfaces. Humans, and therefore AI, can be trained to put such information accurately into context, taking account of holistic, spiritual, pastoral and religious dimensions. As a consequence, it is important that feedback be gathered from service users and staff on their confidence in the support provided by an AI system.

Theme: Staying Healthy

The principle of staying healthy is to ensure that people in Wales are well informed to manage their own health and wellbeing. In the main, staff are assumed to nudge people towards good behaviour, by protecting them from harming themselves or by making them aware of good lifestyle choices. The care system is particularly interested in inequalities between communities, which place a high demand on the public care system.

AI is being deployed to nudge human behaviours that have a good effect on the length and quality of human life, such as smoking cessation, regular activity and exercise, socialisation and education. This could take the form of nudges staff give people based on service users' self-reported activity, or of automated nudges from electronic devices service users have access to, e.g., smart watches and phones.

At present, ‘wellbeing advice’ from staff and AI solutions is an unregulated field. In Wales, healthcare staff aim to deliver clinical care that is pertinent to their speciality, as well as advising service users and signposting them to support that encourages positive health behaviours. This is embodied in the technique of 'making every contact count'. It is therefore appropriate to suggest that reports on any AI system's fitness for purpose account for how often wellbeing advice is provided alongside focused clinical care and advice.
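A report of the kind suggested above could start from a simple rate: of the contacts where clinical care was given, what share also included wellbeing advice? The `Contact` record and its fields are hypothetical. A real report would draw on whatever the service actually logs, and could compare staff-led and AI-led contacts.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    """One service-user contact (hypothetical fields)."""
    clinical_care: bool      # focused clinical care or advice was given
    wellbeing_advice: bool   # wellbeing advice or signposting was also given


def wellbeing_advice_rate(contacts):
    """Share of clinical contacts that also included wellbeing advice (0.0 if none)."""
    clinical = [c for c in contacts if c.clinical_care]
    if not clinical:
        return 0.0
    return sum(c.wellbeing_advice for c in clinical) / len(clinical)


if __name__ == "__main__":
    log = [Contact(True, True), Contact(True, False), Contact(True, True), Contact(False, True)]
    print(f"{wellbeing_advice_rate(log):.0%} of clinical contacts also 'made the contact count'")
```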

Theme: Safe care

The principle of safe care is to ensure that people in Wales are protected from harm and supported to protect themselves from known harm. In practice, the people best placed to advise on known harm are clinical and technical people who have had safety officer training. In UK law, AI system design and implementation needs an associated clinical/technical safety plan and hazard log (e.g., DCB0129 and DCB0160). System suppliers are responsible for DCB0129, and system users are responsible for DCB0160. The mitigations in a risk register should be reflected in system design or training material.
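The hazard log the standards ask for is, at heart, a structured list. The sketch below shows one possible shape for it, with the supplier/user split described above. The field names, the 1-5 scales and the simple likelihood-times-severity score are assumptions for illustration, not the risk matrix defined in DCB0129 or DCB0160. The example hazards are invented.

```python
from dataclasses import dataclass

VALID_OWNERS = {"supplier (DCB0129)", "user organisation (DCB0160)"}


@dataclass
class Hazard:
    """One entry in a hypothetical clinical hazard log.

    The 1-5 scales and the likelihood x severity score are assumptions for
    illustration; the standards define their own risk matrix, which a
    trained Clinical Safety Officer applies.
    """
    description: str
    likelihood: int   # 1 (very low) to 5 (very high), assumed scale
    severity: int     # 1 (minor) to 5 (catastrophic), assumed scale
    mitigation: str   # should appear in system design or training material
    owner: str

    def __post_init__(self):
        if self.owner not in VALID_OWNERS:
            raise ValueError(f"unknown owner: {self.owner}")
        for value in (self.likelihood, self.severity):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and severity must be between 1 and 5")

    def score(self) -> int:
        return self.likelihood * self.severity


def review_order(log):
    """Highest-scoring hazards first, so the riskiest are reviewed first."""
    return sorted(log, key=lambda h: h.score(), reverse=True)


if __name__ == "__main__":
    log = [
        Hazard("Voice notes transcribed into the wrong record", 2, 4,
               "Confirm patient identity on screen before saving", "supplier (DCB0129)"),
        Hazard("Staff rely on AI triage during a system outage", 3, 3,
               "Paper fallback process covered in training", "user organisation (DCB0160)"),
    ]
    for hazard in review_order(log):
        print(hazard.score(), hazard.description, "->", hazard.owner)
```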

If a system is making decisions with no person in the decision-making loop it is likely to be classed as a medical device. In this case users should expect such a device to have evidence of compliance with UK MHRA and potentially UK MDR. The process required to demonstrate evidence of compliance is lengthy and can take between 2 and 5 years.

MHRA and MDR compliance are not sufficient as stand-alone assurances of safety. The fitness for purpose for users and clinical situations still needs to be evaluated by health and social care practitioners and should not be overlooked.

Theme: Effective Care

The principle of effective care is that people receive the right care and support as locally as possible and are enabled to contribute to making that care successful.

People have a right not to be subject to a decision based solely on automated processing (see Article 22 of the GDPR), so it is wise to ask people whether they wish AI to make a decision about their care before using it.
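One way to build the "ask first" practice into a system is to route every AI output through a check on whether the person has agreed to a solely automated decision. This is a minimal sketch with hypothetical names. It models only that one practice, not the full set of conditions and safeguards in Article 22.

```python
from dataclasses import dataclass


@dataclass
class Person:
    name: str
    agreed_to_automated_decision: bool  # asked before the AI is used (hypothetical field)


def route_decision(person: Person, ai_recommendation: str) -> str:
    """Decide who makes the final call about this person's care.

    Without the person's agreement, the AI output is advice only and a
    clinician makes the decision, so no decision is based solely on
    automated processing.
    """
    if person.agreed_to_automated_decision:
        return f"automated decision: {ai_recommendation}"
    return f"clinician review: {ai_recommendation} (AI advice only)"


if __name__ == "__main__":
    for p in (Person("Service user 1", True), Person("Service user 2", False)):
        print(p.name, "->", route_decision(p, "book a follow-up call"))
```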

AI systems that automate the storage or dissemination of clinical information (e.g., voice recognition systems) can be expected. The business cases for supporting service users remotely, within their own homes and in a timely manner, will be compelling.

Theme: Dignified care

The principle of dignified care is that the people in Wales are treated with dignity and respect and treat others the same. Fundamental human rights to dignity, privacy and informed choice must be protected at all times, and the care provided must take account of the individual’s needs, abilities and wishes.

One of the main features of this standard is that the voices of staff and service users are heard. Service users have, on average, two generations of family who have grown up with human-to-human interaction and advice and see this as the norm; however, there has been an accelerating trend of people taking advice from search engines and via video. Like people, AI systems can be trained to undertake active listening and motivational interviewing, and to deliver advice with compassion and empathy.

AI methods to automatically understand the empathy, rapport and trust within such interactions are being developed.

Theme: Timely Care

To ensure the best possible outcome, people’s conditions should be diagnosed promptly and treated according to clinical need. The timely provision of appropriate advice can have a huge impact on service users’ health outcomes. AI systems are being deployed to give service users faster access to care services, e.g., automated self-booking and triage systems.

Theme: Individual care

The principle of individual care is that people are treated as individuals, reflecting their own needs and responsibilities. This is manifested by staff respecting service users’ needs and wishes, or those of their chosen advocates, and by providing support when people have access issues, e.g., sensory impairment or disability.

AI that understands the needs of individuals can be as simple as mobile phone technology that knows when people are near their phone and can be contacted, or that translates one language into another. In the main, this class of AI interprets service users’ needs and represents them. Such AI can be provided by a service (e.g., a booking system) or by the service user (e.g., a communication app or an automated personal health record).

Theme: Staff and resources

The principle is that people in Wales can find information about how their NHS is resourced and makes effective use of resources. This goes beyond clinical and technical safety, governance and leadership; it extends to giving staff a sense of agency to improve services. A health service must determine the workforce requirements to deliver high quality, safe care and support. As discussed in the Topol Review, staff need a better understanding of the strengths and weaknesses of AI.

One of the key challenges clinicians face when considering AI is deciding where to focus resources. Too often they are faced with AI purchasing decisions with no framework for understanding the value, fitness for purpose or time-to-market issues associated with AI. Supporting staff to make decisions that combine service development requirements (identified through co-production between health care teams and service users) with evidence-informed AI applications has the best potential to optimise resources.

It is useful to think of AI as technology that can be used to classify or predict outcomes, e.g., whether an X-ray shows cancer, or whether a person needs to be sent a reminder. When you understand how wrong that prediction or classification can be, you are better able to judge the value of AI in your work.
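
One way to make "how wrong can it be" concrete for a classifier is to count its four possible outcomes against a reference standard. The sketch below assumes invented counts for 1,000 X-rays; it shows how sensitivity (cancers caught), specificity (clear X-rays correctly cleared) and the two error rates follow from those counts.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    true_positive: int   # cancer present, flagged
    false_positive: int  # cancer absent, flagged (false alarm)
    true_negative: int   # cancer absent, cleared
    false_negative: int  # cancer present, cleared (missed)


def error_summary(c: ConfusionCounts) -> dict[str, float]:
    positives = c.true_positive + c.false_negative
    negatives = c.true_negative + c.false_positive
    return {
        "sensitivity": c.true_positive / positives,
        "specificity": c.true_negative / negatives,
        "missed_rate": c.false_negative / positives,
        "false_alarm_rate": c.false_positive / negatives,
    }


# Hypothetical results for 1,000 X-rays (50 with cancer, 950 without)
counts = ConfusionCounts(true_positive=45, false_positive=30, true_negative=920, false_negative=5)
summary = error_summary(counts)
print({name: round(value, 3) for name, value in summary.items()})
```

Here 1 in 10 cancers would be missed; whether that is acceptable is a clinical judgement, not a property of the software.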

Once you know what you want to classify or predict, and how wrong you can afford to be, ask yourself: what data do I have access to that will enable me to reach that conclusion? This matters because AI is not an artificial intelligence; it is a set of techniques for looking at data and changing the way systems operate based on that data.

For example, when identifying cancer on an X-ray, AI analyses the pixels in the image and reaches a conclusion about what they represent, based on what it has been trained to identify.
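
To illustrate "what it has been trained to identify", here is a deliberately tiny nearest-centroid classifier: it averages labelled training images and assigns a new image to whichever average it is closest to. The 3x3 "images" and the labels "bright spot" and "no spot" are hypothetical stand-ins; real X-ray systems typically use much larger images and deep learning models rather than this technique.

```python
# Each toy "image" is a 3x3 grid of pixel brightness (0 = dark, 1 = bright), flattened to 9 values.
Image = list[float]


def centroid(images: list[Image]) -> Image:
    """Average each pixel position across the training images."""
    return [sum(pixels) / len(images) for pixels in zip(*images)]


def distance(a: Image, b: Image) -> float:
    """Euclidean distance between two images, pixel by pixel."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def train(labelled: dict[str, list[Image]]) -> dict[str, Image]:
    """'Training' here just means storing one average image per label."""
    return {label: centroid(images) for label, images in labelled.items()}


def classify(model: dict[str, Image], image: Image) -> str:
    """Assign the label whose average image is closest."""
    return min(model, key=lambda label: distance(model[label], image))


# Hypothetical labelled training images
training = {
    "bright spot": [
        [0, 0, 0, 0, 1.0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0.9, 0.2, 0, 0, 0],
    ],
    "no spot": [
        [0, 0, 0, 0, 0, 0, 0, 0, 0],
        [0.1, 0, 0, 0, 0.1, 0, 0, 0, 0.1],
    ],
}
model = train(training)
print(classify(model, [0, 0, 0, 0.1, 0.8, 0.1, 0, 0, 0]))
```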

The Importance of Data

Development of AI is wholly dependent on data. Gathering the data needed to build a model that makes fit-for-purpose predictions or classifications is a highly governed area. The Data Protection Act and GDPR place significant restrictions on what data can be used and for what purpose. As a consequence, before embarking on use, research or development, it is wise to start by understanding the implications and impact of AI on a use case.

The information governance teams who act as guardians of public data will want to consider evidence in data protection impact assessments (DPIAs) and compliance with the Data Protection Act, GDPR and ICO guidelines.

How do you train AI to classify or predict?

In short, you give a data scientist access to data. With that data, the scientist and their computer program plot the features of the data and reach a conclusion about what it means or predicts.

This is very much like plotting one set of data on a graph, drawing a line through it and using that line to make predictions. How wrong you can afford to be when drawing the line determines how wrong you can afford to be with your prediction or classification.
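
The graph analogy can be sketched directly: fit a straight line by ordinary least squares, then measure the largest gap between the line and the points. The data and the acceptable error of 0.25 are assumptions for illustration; the point is that the same tolerance decides whether the line's predictions are fit for purpose.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares straight line y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def worst_error(xs: list[float], ys: list[float], slope: float, intercept: float) -> float:
    """Largest gap between the line and the observed points."""
    return max(abs(y - (slope * x + intercept)) for x, y in zip(xs, ys))


# Hypothetical observations (e.g., week number against demand)
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
slope, intercept = fit_line(xs, ys)
error = worst_error(xs, ys, slope, intercept)

acceptable_error = 0.25  # assumption: how wrong this use case can afford to be
print(f"y = {slope:.2f}x + {intercept:.2f}, worst error {error:.2f}")
print("fit for purpose" if error <= acceptable_error else "not fit for purpose")
print(f"prediction for x = 6: {slope * 6 + intercept:.2f}")
```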

In the example of the cancer identifier, you would need a high level of confidence that the data accurately represents 'probably cancer' or 'probably not cancer'. The consequences and potential harm of getting this wrong are far more significant than for, say, an analysis that predicts whether or not an individual is likely to attend a seminar.
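
The difference between the two use cases shows up when choosing a decision threshold. The sketch below, using nine invented scores, picks the strictest threshold that still catches a required share of true cases: every case (a sensitivity of 1.0, assumed for the cancer identifier) or three in four (assumed for seminar attendance). Catching more true cases costs more false alarms.

```python
def sensitivity_at(threshold: float, scores: list[float], labels: list[bool]) -> float:
    """Share of true cases whose score reaches the threshold (i.e., are flagged)."""
    positives = [s for s, y in zip(scores, labels) if y]
    return sum(s >= threshold for s in positives) / len(positives)


def false_alarms_at(threshold: float, scores: list[float], labels: list[bool]) -> int:
    """Number of non-cases wrongly flagged at this threshold."""
    return sum(s >= threshold for s, y in zip(scores, labels) if not y)


def highest_threshold_for(target: float, scores: list[float], labels: list[bool]) -> float:
    """Pick the strictest threshold that still catches the required share of true cases."""
    for threshold in sorted(set(scores), reverse=True):
        if sensitivity_at(threshold, scores, labels) >= target:
            return threshold
    raise ValueError("no threshold reaches the target")


# Hypothetical model scores and true outcomes for nine people
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [True, True, False, True, False, True, False, False, False]

for use_case, target in [("cancer identifier", 1.0), ("seminar attendance", 0.75)]:
    t = highest_threshold_for(target, scores, labels)
    print(f"{use_case}: threshold {t}, false alarms {false_alarms_at(t, scores, labels)}")
```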

Where is AI technology in Wales?

- 'NudgeShare' is a technology to promote self-care. It uses local differential privacy and on-phone machine learning to nudge app users towards self-care behaviours and to proactively seek regular contact with people in their care network.

- AutoFAQ is a technology that can ingest audio recordings and enable ICO-registered information processors to cluster and visualise the demand for GP services using natural language processing (NLP) and natural language understanding (NLU).

- LineSafe is an automated system for checking that a nasogastric tube is in the correct and safe anatomical position.

- RiTTA is a text chatbot. It provides real time answers to common questions at any time of the day or night. Importantly, these are conversations, with tailored answers, not just a directory of information.

- MineAct is a technology that identifies cognitive dissonance in a young person’s Minecraft chat and instigates Acceptance and Commitment Therapy via a text chat interface.

- Amazon Alexa, when used in an acute care setting, can suggest drug dosages to unidentified users.

- IBM Watson, when used to recommend actions that have a clinical outcome for COVID.
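
NudgeShare is described as using local differential privacy. Its exact method is not stated here, so the sketch below shows randomised response, one standard local differential privacy technique: each phone randomly flips its answer before sharing it, so no single report can be trusted, yet the overall rate can still be estimated. The privacy budget `epsilon` and the data are assumptions.

```python
import math
import random


def randomised_response(true_answer: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true answer with probability e^eps / (e^eps + 1), otherwise the opposite."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1)
    return true_answer if rng.random() < p_truth else not true_answer


def estimate_true_rate(reports: list[bool], epsilon: float) -> float:
    """Undo the known noise: observed = p * rate + (1 - p) * (1 - rate)."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1)
    observed = sum(reports) / len(reports)
    return (observed - (1 - p_truth)) / (2 * p_truth - 1)


rng = random.Random(42)
epsilon = 1.0  # assumption: privacy budget; smaller means more privacy and more noise
# Hypothetical: 30% of 20,000 app users did a self-care activity today
true_answers = [i % 10 < 3 for i in range(20_000)]
reports = [randomised_response(a, epsilon, rng) for a in true_answers]
print(f"estimated rate: {estimate_true_rate(reports, epsilon):.3f}")
```

Only the noisy reports leave each phone; the estimate is useful in aggregate, not about any one person.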

How AI is deployed

AI technology is commonly deployed within hardware and software, and increasingly it is deployed as a service. Users are licensed to use the technology under specific terms. Considering those terms is important, particularly where the technology can adapt itself based on the data it is given access to.

Licensing AI’s use

Within the health and social care system of the United Kingdom, a common practice is to require that purchased technology is provided under an open-source licence. This ensures that users are not locked into particular technologies, or into their support and development. That said, your ability to do this will depend very much on whether you can influence how the AI is made. An organisation that provides data to train AI is in a position to influence the terms of the AI licence.

It is useful to present AI in non-technical terms. One method is to present it within the themes that underpin health and social care service delivery. Below, for example, AI developments are set against the Welsh Care Quality Framework.

Theme: Person Centred Care

Person-centred care refers to a process that is people-focused, promotes independence and autonomy, provides choice and control, and is based on a collaborative team philosophy.

It is possible that AI could be used to understand people’s needs and views and to help build relationships with family members, e.g., via voice interfaces. Humans, and therefore AI, can be trained to put such information accurately into context against holistic, spiritual, pastoral and religious dimensions. Because it is not self-evident that an AI system does this well, it is important to gather feedback from service users and staff on their confidence in the support the system provides.

Theme: Staying Healthy

The principle of staying healthy is to ensure that people in Wales are well informed to manage their own health and wellbeing. It is generally assumed that staff’s role is to nudge people towards good behaviour, by protecting them from harming themselves or by making them aware of good lifestyle choices. The care system is particularly interested in inequalities between communities, which place a high demand on the public care system.

AI is being deployed to nudge human behaviours that have a good effect on the length and quality of life, such as smoking cessation, regular activity and exercise, socialisation and education. This could take the form of nudges that staff give people based on service users’ self-reported activity, or automated nudges from electronic devices that service users have access to, e.g., smart watches and phones.

At this time, ‘wellbeing advice’ from staff and AI solutions is an unregulated field. In Wales, healthcare staff aim to deliver clinical care that is pertinent to their speciality, as well as advising service users and signposting them to support that encourages positive health behaviours. This is embodied in the technique of 'making every contact count'. It is therefore appropriate to suggest that reports on any AI system's fitness for purpose account for how often wellbeing advice is provided alongside focused clinical care and advice.
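
A sketch of such a measure, assuming a hypothetical activity log that records, for each contact, whether clinical advice and wellbeing advice were given. It reports the share of clinical contacts that also included wellbeing advice.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    # Hypothetical fields an AI system's activity log might record per contact
    clinical_advice_given: bool
    wellbeing_advice_given: bool


def wellbeing_alongside_clinical(contacts: list[Contact]) -> float:
    """Share of clinical contacts that also included wellbeing advice."""
    clinical = [c for c in contacts if c.clinical_advice_given]
    if not clinical:
        return 0.0
    return sum(c.wellbeing_advice_given for c in clinical) / len(clinical)


log = [
    Contact(clinical_advice_given=True, wellbeing_advice_given=True),
    Contact(clinical_advice_given=True, wellbeing_advice_given=False),
    Contact(clinical_advice_given=True, wellbeing_advice_given=True),
    Contact(clinical_advice_given=False, wellbeing_advice_given=True),
]
print(f"{wellbeing_alongside_clinical(log):.0%} of clinical contacts included wellbeing advice")
```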

Theme: Safe care

The principle of safe care is to ensure that people in Wales are protected from harm and supported to protect themselves from known harm. In practice, the people best placed to advise on known harm are clinical and technical people who have had Clinical Safety Officer training. In UK law, AI system design and implementation needs an associated clinical/technical safety plan and hazard log (e.g., DCB0129 and DCB0160). System suppliers are responsible for DCB0129, and system users are responsible for DCB0160. The mitigations in a risk register should be reflected in system design or training material.
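
The link between a hazard log and its mitigations can be checked mechanically. The sketch below is a hypothetical, simplified hazard log: the 1-5 severity and likelihood scales and their product are assumptions, not the DCB0129 risk matrix. It lists hazards with no mitigation, and mitigations not yet reflected in system design or training material, highest initial risk first.

```python
from dataclasses import dataclass, field


@dataclass
class Mitigation:
    description: str
    # Where the mitigation is made real: "design", "training", or "" if not yet evidenced
    evidenced_in: str = ""


@dataclass
class Hazard:
    hazard_id: str
    description: str
    # Simplified 1-5 scales: an assumption, not the DCB0129 risk matrix
    severity: int
    likelihood: int
    mitigations: list[Mitigation] = field(default_factory=list)

    def initial_risk(self) -> int:
        return self.severity * self.likelihood


def gaps(log: list[Hazard]) -> list[str]:
    """List hazards with no mitigation, and mitigations not reflected in design or training."""
    problems = []
    for hazard in sorted(log, key=Hazard.initial_risk, reverse=True):
        if not hazard.mitigations:
            problems.append(f"{hazard.hazard_id}: no mitigation recorded")
        for m in hazard.mitigations:
            if m.evidenced_in not in ("design", "training"):
                problems.append(f"{hazard.hazard_id}: '{m.description}' not in design or training")
    return problems


hazard_log = [
    Hazard("H1", "Triage model under-prioritises chest pain", 5, 2,
           [Mitigation("Chest pain always escalated to a clinician", "design")]),
    Hazard("H2", "Staff over-trust AI suggestions", 4, 3,
           [Mitigation("Explain model limits to users")]),
    Hazard("H3", "Voice transcription drops negations", 3, 3),
]
for line in gaps(hazard_log):
    print(line)
```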

If a system makes decisions with no person in the decision-making loop, it is likely to be classed as a medical device. In this case, users should expect the device to have evidence of compliance with MHRA requirements and potentially the UK MDR. The process of demonstrating compliance is lengthy and can take between 2 and 5 years.

Funders and Data Guardians

Governing AI

Classifiers and prediction tools are usually embedded in hardware and software used in health and social care. The providers of that hardware and software are governed in two ways: by contract, and by health guidance and law. In the UK this guidance is provided by NICE and the MHRA. The permitted margin for error of a classifier or prediction algorithm in a given situation is in the hands of the supplier who uses the algorithm and the contract they have with the NHS.

In situations where such AI technologies do not have a clinical outcome (for example, booking people onto a training course), the risk of harm is low. This means that the health and social care system does not try to police the development and implementation of such technologies. Conversely, where the potential risk to humans is high, the policing is rigorous.

Depending on where the risk to humans lies, this policing takes the form of requirements to adhere to standards and to undergo independent assessment. In some cases, it mandates extensive evidence and research to support compliance with government agency guidance or law. The governance of standards adherence is nation-specific. For example, AI technology that complies with UK regulation cannot automatically claim readiness for deployment in the United States, and vice versa.

Important questions about AI technology to consider include:

How much error or inaccuracy is acceptable? (How wrong can you afford to be?)

What are the possible consequences of inaccuracies or error?

What mitigating steps can be taken to reduce the impact or seriousness of any acceptable error?

What evidence is necessary to demonstrate that the AI is fit for purpose?

In the United Kingdom, NICE's guidance on evidence (its Evidence Standards Framework for digital health technologies) is a very good way of understanding what information is needed, based on the purpose of the AI you are faced with. A good first step is to place the AI technology in an evidence tier based on its purpose.
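
Placing a technology in a tier can be sketched as a lookup from its functions. The mapping below is simplified from the functional classification in NICE's 2019 Evidence Standards Framework (tiers 1, 2, 3a and 3b), and it assumes a product with several functions takes the tier of its most demanding one. NICE has since revised the framework, so check the current edition; the example products in the comments are hypothetical.

```python
# Functions and tiers simplified from NICE's 2019 Evidence Standards Framework
# for digital health technologies; check the current edition before relying on this.
ESF_2019_TIER_BY_FUNCTION = {
    "system service": "1",
    "inform": "2",
    "simple monitoring": "2",
    "communicate": "2",
    "preventative behaviour change": "3a",
    "self-manage": "3a",
    "treat": "3b",
    "active monitoring": "3b",
    "calculate": "3b",
    "diagnose": "3b",
}
TIER_ORDER = ["1", "2", "3a", "3b"]  # lowest to highest evidence burden


def evidence_tier(functions: list[str]) -> str:
    """Assumption: a product with several functions takes the tier of its most demanding one."""
    tiers = [ESF_2019_TIER_BY_FUNCTION[f] for f in functions]
    return max(tiers, key=TIER_ORDER.index)


print(evidence_tier(["system service"]))           # e.g., a hypothetical booking optimiser
print(evidence_tier(["inform", "communicate"]))    # e.g., a hypothetical FAQ chatbot
print(evidence_tier(["self-manage", "diagnose"]))  # e.g., a hypothetical diagnostic app
```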

The role of the Care Quality Commission

If an AI supplier provides software as a service, and that service is a clinical service, the supplier will most likely need to register with the Care Quality Commission (CQC). A directory of registered suppliers is available.

How funding sources affect AI deployment

Staff and clinicians in the health and social care sector are recommended to take an interest in how potential AI is being funded. Different funding streams have different advantages and disadvantages. Government agencies expect long-term returns on public money invested, and businesses can have the same expectation. However, firms often require a return on investment, in the form of profitable income, within two years. Venture capitalists have much higher expectations: this can be a 10x return on their investment within two years.
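
To compare these expectations on a yearly basis, a simple compound-growth calculation converts a return multiple over a number of years into an equivalent annual growth rate: multiple^(1 / years) - 1. The sketch applies it to the 10x-in-two-years figure from the text, with breaking even over the same period as a reference point.

```python
def annualised_return(multiple: float, years: float) -> float:
    """Yearly growth rate that turns 1 unit into `multiple` units over `years` years."""
    return multiple ** (1 / years) - 1


# 10x within two years is the venture capital figure from the text;
# 1x (getting the money back) is included only as a reference point.
for label, multiple in [("venture capital, 10x in 2 years", 10), ("break even, 1x in 2 years", 1)]:
    print(f"{label}: {annualised_return(multiple, 2):.0%} per year")
```

A 10x return in two years therefore implies the investment growing by roughly 216% every year.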

For AI solutions that fit into Tier 1 of the NICE evidence framework, evidence of fitness for purpose can be self-reported; there is no need for independent verification. These types of applications attract all types of funding, as the return on investment can come within one year.

The more evidence that is needed, the longer the research and development process for a particular type of AI. Lengthy development processes depend on more significant funds. Developments in AI that require evidence at Tier 2 or above tend to be funded by university research and development grants. When the evidence they produce enables the private sector to deploy a solution within around one year, they attract private sector funding.

Unlocking IP

To ensure that private sector funding does not lock health and social care organisations out of other potential developments, it is important to have a formal agreement with private sector funders. Effective agreements are generally associated with the standard operating procedures of host organisations in the health and social care sector. However, most do not have access to guidance on managing the associated intellectual property. In this case, it is useful to refer to the Lambert agreements on the Intellectual Property Office (IPO) website.

The choice of agreement will depend on the organisation's board. Many AI developments are conducted under Lambert Agreement 4.

Where to Build AI Models

Significant public money is spent trying to bring data together into large data resources. In practice this is a very difficult task, and it assumes that models need to be created centrally and distributed nationally. That assumption should be tested, because it is often acceptable to build models locally and deploy them nationally. This technique, federated machine learning, has a number of advantages for information governance, not least that data does not need to leave the boundaries of a health board's firewall to be useful in the development of AI.
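
A minimal sketch of the idea, assuming two hypothetical health boards that each fit a simple straight-line model on their own records and share only the fitted parameters and a record count. The central step is a single round of weighted parameter averaging, in the spirit of federated averaging (FedAvg); real federated training usually repeats this over many rounds, and the averaged model only approximates one trained on pooled data.

```python
def local_fit(xs: list[float], ys: list[float]) -> dict[str, float]:
    """Runs inside one health board: fit y = slope * x + intercept on local data only."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    # Only model parameters and a record count leave the board, never the records
    return {"slope": slope, "intercept": mean_y - slope * mean_x, "n": n}


def federated_average(updates: list[dict[str, float]]) -> dict[str, float]:
    """Combine local models, weighting each by how many records it was trained on."""
    total = sum(u["n"] for u in updates)
    return {
        key: sum(u[key] * u["n"] for u in updates) / total
        for key in ("slope", "intercept")
    }


# Hypothetical data held separately by two health boards
board_a = local_fit([1, 2, 3], [3, 5, 7])
board_b = local_fit([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
print(federated_average([board_a, board_b]))
```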

Risk Managers

Risk and AI

In health and social care, staff have a duty of care to be conscious of the risks of treating and of not treating a condition. In essence, if you multiply how wrong your classifier or prediction can be by the harm that an error could cause, you understand how important it is that the classifier or prediction is correct.
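
The multiplication can be sketched with two hypothetical use cases. The error rates and the 0-100 harm scores are invented for illustration; the point is that a classifier that is wrong far less often can still carry the higher risk when its errors are more harmful.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    error_rate: float     # fraction of predictions expected to be wrong (assumed)
    harm_if_wrong: float  # hypothetical harm score on an arbitrary 0-100 scale

    def risk_score(self) -> float:
        return self.error_rate * self.harm_if_wrong


cases = [
    UseCase("seminar attendance prediction", error_rate=0.20, harm_if_wrong=1),
    UseCase("cancer identification on X-ray", error_rate=0.02, harm_if_wrong=100),
]
ranked = sorted(cases, key=UseCase.risk_score, reverse=True)
for case in ranked:
    print(f"{case.name}: {case.risk_score():.2f}")
```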

The Role of Clinical Safety Officers

An effective way to understand the impact of technologies on clinicians, users and clinical practice is to comply with, and self-certify against, the Data Coordination Board's standards using Clinical Safety Officers; in particular, DCB0129 and DCB0160.

These standards are useful because they address the risk of harm and its mitigation. In considering the risk of harm, AI users find themselves considering the implications of system design for any person who may be affected by it. This opens up conversations about the use of data, inferences and how they are created, and the implications of decisions about AI use. A health and social care organisation that self-certifies against DCB0160 needs to follow procedures very similar to those of DCB0129.

In the absence of a CE mark, both of these standards are helpful. It could be argued that even where a CE mark exists, evidence that an AI supplier and user have applied both standards illustrates a commitment to the safe use of AI in health and social care. In UK law they are a mandatory requirement for the design, provision and monitoring of an IT system within the health and social care setting.

To self-certify against DCB0129 / DCB0160, a Clinical Safety Officer needs to be in place. Clinical Safety Officers are clinicians who have been trained in the effective implementation of these standards. The cost of such training is very low.

The value of a CE mark

If a product has CE marking, understanding its intended use is very important. The duty of care for how technology is used in health and social care sits with the user, not the supplier. It is therefore important not to assume that, because a supplier has a CE mark, the technology is certified for the purpose you wish to apply it to. Firms that carry CE marking, and the purposes it covers, can often be found on the MHRA website.

Technical Teams

Data Classification and Protection

Data about people and their lives has never been more readily available. Historically, people could only analyse coded data (e.g., diagnoses, symptoms, and test and procedure results); nowadays they can also easily cross-reference this with patient-reported data. Analysing data on people's lives, clinical and social encounters, family and work history, and genetics is now possible. These data sources can be analysed to predict health and social care trends and individual outcomes. Data needs to be classified and protected as a valuable asset.

Speeding up Life Science, Biomedical and Service Analytics

AI has the potential to accelerate academic research and optimise scientific processes. The ability to swiftly analyse and visualise biomedical data is improving triage and decision making. Swifter sharing of this data has made pan-regional decision making more effective. Coupled with developments in cyber security, data sharing inside and outside of health and social care has become easier and safer. The ease and speed with which one can obtain and analyse data and draw insights improves the management of services and health outcomes. Pathway management and the systems within it create a myriad of data sources that can be used to improve the efficient, safe and compliant delivery and development of health and social care services.

People in the AI Value Chain

AI depends on mathematics, statistics, computer science, computing power and a human's will to learn. People involved in AI have many titles and skills. They include:

- Front line workers (nurses, doctors, allied health professionals, administrative staff etc) who gather, analyse, and control data relating to human interactions and use of management systems

- Life Scientists who manage and research laboratory facility and clinical data

- Epidemiologists & statisticians who look at public health

- Data scientists who look at data analytics, modelling and systems analysis

- Technology experts skilled in developing and implementing electronic patient/hospital records systems and specific data types (images, text, coded data, voice etc)

- Managers who use methodologies and communication tools to encourage system use

Optimising Organisational Outcomes

The NHS and social care sector has over 1.5 million staff, each with their own systems. The myriad of providers and programmes means that data is heavily siloed. In practice, few have data of enough quantity and quality to make centralised AI systems a realistic option, so the potential for federated AI development is significant. Integrating data into AI model development is therefore a realistic, current challenge.

The opportunities to improve workflows, predictive analytics, visualisations, data search, data tracking, data curation, and model development and deployment are apparent. All of these could improve the efficiency and effectiveness of existing and emerging health and social care outcomes. AI will increase the opportunities to manage resources flexibly, dynamically and in real time.

An expanded data and computer science service

This will require increased use of containerisation for AI models and pathway systems, and the development of microservices, cyber security systems, image processing, natural language processing, voice model development and curation, graphical processing, web development, etc. Delivering these on a variety of data sources, using agile techniques, will require people trained to understand complex and black-box algorithms. It will be increasingly necessary to track data from source, re-use and cross-reference health and public domain data, create and curate ground truth data for pathways, and generate and track insights as they affect pathways.

Developments in data analysis & the importance of data stewardship

The health and social care system has historically relied upon clinical trial and survey data. Increasingly, it is using structured electronic health record data to predict and classify health outcomes. As natural language tools become more accurate and more commoditised, linking structured data to inferences drawn from free text using text analytics will become widespread. Cross-referencing these with dispensing records and service funding plans will strengthen value-based healthcare analysis.
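A toy illustration of linking structured records to an inference drawn from free text. A handful of regular expressions stands in here for a real natural language tool, which is a deliberate simplification: real clinical text analytics has to handle negation, context and spelling far better. The patient identifiers, field names and note wording are hypothetical, and notes are assumed to be listed oldest first.

```python
"""Toy sketch: enrich structured records with a status inferred from free text."""
import re
from typing import Dict, List, Optional

# Checked in this order so "non-smoker" is not mistaken for "smoker".
PATTERNS = [
    ("never", re.compile(r"\b(non[- ]?smoker|never smoked|does not smoke)\b", re.I)),
    ("former", re.compile(r"\b(ex[- ]?smoker|former smoker|quit smoking|stopped smoking)\b", re.I)),
    ("current", re.compile(r"\b(smoker|smokes|smoking)\b", re.I)),
]


def infer_smoking_status(note: str) -> Optional[str]:
    """Return 'never', 'former', 'current', or None if the note is silent."""
    for label, pattern in PATTERNS:
        if pattern.search(note):
            return label
    return None


def link_records(structured: List[Dict], notes: Dict[str, List[str]]) -> List[Dict]:
    """Add the most recent text-derived status to each structured record."""
    linked = []
    for row in structured:
        patient_notes = notes.get(row["patient_id"], [])
        statuses = [s for s in map(infer_smoking_status, patient_notes) if s]
        linked.append({**row, "smoking_from_text": statuses[-1] if statuses else None})
    return linked


if __name__ == "__main__":
    records = [{"patient_id": "p1", "diagnosis": "COPD"},
               {"patient_id": "p2", "diagnosis": "asthma"}]
    notes = {"p1": ["Smokes 10 a day.", "Ex-smoker, stopped smoking in March."]}
    for row in link_records(records, notes):
        print(row)
```

The structured fields are left untouched; the text-derived value sits alongside them in its own clearly named field, so anyone using the linked data can see which parts are inferred rather than coded.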

Cross-referencing such data with analysis of patient discussions has the potential to improve understanding of how effective interactions are, and to open up opportunities for more effective triage. Cross-referencing health data with real-world social data (from wearables, for example) is common practice in private healthcare economies and can be expected to gain momentum in the UK. Government agencies tasked with information governance will increasingly demand sensitive data stewardship and privacy-protecting techniques. Increased due diligence around online monitoring can be expected, particularly as techniques used in the advertising sector for population and retail consumer analysis become widespread in monitoring prescription compliance and dispensing. Formal assurance of methods and techniques in this domain can be expected wherever they affect services that may have a clinical outcome.
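One widely used privacy-protecting technique for cross-referencing datasets is keyed pseudonymisation: each dataset has its direct identifier replaced by an HMAC of that identifier, computed with a secret key held by a trusted party, so records can still be joined on the pseudonym without the identifier itself being shared. This minimal sketch uses Python's standard `hmac` and `hashlib` modules; the identifiers (fictitious), field names and key handling are assumptions, and pseudonymisation reduces, rather than removes, the risk of re-identification.

```python
"""Sketch: keyed pseudonymisation so two datasets can be joined without raw IDs."""
import hashlib
import hmac
from typing import Dict, List


def pseudonymise(identifier: str, key: bytes) -> str:
    """HMAC-SHA256 of a normalised identifier (spaces removed, upper-cased)."""
    normalised = identifier.replace(" ", "").upper()
    return hmac.new(key, normalised.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymise_rows(rows: List[Dict], id_field: str, key: bytes) -> List[Dict]:
    """Swap the identifier field for a pseudonym; other fields are kept."""
    result = []
    for row in rows:
        clean = {k: v for k, v in row.items() if k != id_field}
        clean["pseudonym"] = pseudonymise(row[id_field], key)
        result.append(clean)
    return result


def join_on_pseudonym(left: List[Dict], right: List[Dict]) -> List[Dict]:
    """Inner join; assumes at most one row per pseudonym in `right`."""
    index = {row["pseudonym"]: row for row in right}
    return [{**row, **index[row["pseudonym"]]} for row in left if row["pseudonym"] in index]


if __name__ == "__main__":
    key = b"held-by-a-trusted-third-party"   # hypothetical; never hard-code a real key
    health = [{"nhs_no": "999 000 0001", "condition": "hypertension"}]   # fictitious
    wearable = [{"nhs_no": "9990000001", "mean_daily_steps": 4200}]      # fictitious
    joined = join_on_pseudonym(pseudonymise_rows(health, "nhs_no", key),
                               pseudonymise_rows(wearable, "nhs_no", key))
    print(joined)
```

A keyed hash is used rather than a plain hash because identifiers such as NHS numbers come from a small, structured space: without the key, anyone could hash every possible number and reverse the pseudonyms.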

The changing world of bioinformatics

The cost of generating and analysing biological data has fallen significantly. The ability to scale and manage data often sits in research establishments and universities that have government-funded, state-of-the-art infrastructure, cloud computing, security testing, and students to analyse health and social care themes. The opportunity for local clinicians and carers to direct how data is analysed, tagged and structured for local health outcomes is clear. In Wales, this has manifested itself in the local data analytics groups within the National Data Repository project.

Data about people and their lives has never been more readily available. Historically, people could only analyse coded data (e.g. diagnoses, symptoms, and test and procedure results); nowadays they can also easily cross-reference this with patient-reported data. Analysing data on people's lives, clinical and social encounters, family and work history, and genetics is now possible. These data sources can be analysed to predict health and social care trends and individual outcomes. Such data needs to be classed and protected as a valuable asset.

Speeding up Life Science, Biomedical and Service Analytics

AI has the potential to accelerate academic research and optimise scientific processes. The ability to swiftly analyse and visualise biomedical data is improving triage and decision making, and swifter sharing of this data has made pan-regional decision making more effective. Coupled with developments in cyber security, this has made sharing data inside and outside health and social care easier and safer. The ease and speed with which data can be obtained, analysed and turned into insight improves the management of services and health outcomes. Pathway management, and the systems within it, create a myriad of data sources that can be used to improve the efficient, safe and compliant delivery and development of health and social care services.

Researchers

The role of IRAS and HRA

When the deployment of a Tier 2+ AI is expected, it is most likely that formal research will be required. This often takes the place of local trials. To ensure that the success or failure of such trials can be reported effectively, it is worth registering them with IRAS (the Integrated Research Application System) and the HRA (Health Research Authority). Registration is a requirement for academic research applications and for coverage in health and social care publications.

When to start evidence gathering for MHRA

A current challenge is the scarcity of authorised bodies able to independently assess compliance with medical device legislation. Suppliers of AI technology can expect to wait over a year to gain access to a notified body capable of confirming compliance. If a supplier waits until such a body is available before it starts gathering evidence of appropriateness, the resulting delay in bringing the technology to market could devastate the business case for its use.
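The timing point is simple arithmetic. This minimal sketch assumes the notified body's own review takes a fixed number of months once evidence is submitted (a hypothetical figure) and compares gathering evidence only after a body becomes available with gathering it while waiting. The example numbers are illustrative, loosely based on the waits and timescales quoted in this letter, not measured data.

```python
"""Sketch: months to market when evidence gathering starts late versus early."""


def months_to_market(wait_for_body: int, evidence_months: int,
                     review_months: int, start_early: bool) -> int:
    """
    wait_for_body:   months until a notified body can take the work on
    evidence_months: months needed to gather the evidence
    review_months:   months the body needs once evidence is submitted (assumed)
    start_early:     gather evidence while waiting, rather than afterwards
    """
    if start_early:
        # Waiting and evidence gathering overlap; review starts when both are done.
        return max(wait_for_body, evidence_months) + review_months
    # Evidence gathering only begins once the body is available.
    return wait_for_body + evidence_months + review_months


if __name__ == "__main__":
    late = months_to_market(15, 24, 6, start_early=False)
    early = months_to_market(15, 24, 6, start_early=True)
    print(late, early, late - early)  # prints: 45 30 15
```

In this simple model the time saved by starting early always equals the shorter of the two overlapping periods, so the longer the queue for a notified body, the more a late start costs.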

This matters because the range of technology that needs to be governed by the MHRA and NICE can be expected to change and broaden over the next couple of years, as medical device legislation begins to cover all but a few areas of health and social care.

The cost of compliance

In summary, if an AI has an impact on health and social care outcomes, it is most likely to fall into one of the tiers of NICE's evidence standards framework for digital health technologies (DHTs). The burden of evidence increases sharply as you move from Tier 1 to Tier 3. Tier 1 products are self-certified. In practice, unless a Tier 2+ technology already has a CE mark, its supplier needs to provide evidence to what is known as a notified body, which indicates whether the AI meets the evidence requirements. The overarching body in the United Kingdom that manages the demand for this evidence is the MHRA.

As of 2020, the cost of providing this evidence ranges from approximately £0.5 million for a Tier 1 product to £2 to £5 million for a Tier 2 or 3 product. Evidencing compliance can take between six and twelve months for a Tier 1 product, and between two and five years for a Tier 2 or 3 product.

The cost of compliance is an important subject for all staff in health and social care. This is not because they incur such costs themselves, but because suppliers of AI-enabled technologies need to show evidence of compliance, and the time and effort health and social care staff spend creating and collating that evidence needs to be considered when developing or accepting a business case.
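When weighing a business case, the quoted 2020 ranges and the staff time needed to help produce evidence can be set side by side. In this minimal sketch the tier figures are the ones quoted in this section, while the staff hours and hourly rate are hypothetical inputs a local team would have to estimate. Supplier cost and staff cost are kept as separate fields, because they fall on different organisations.

```python
"""Sketch: set supplier compliance ranges beside staff time for a business case."""
from dataclasses import dataclass
from typing import Dict, Tuple

# 2020 ranges quoted in the text, as (low, high). Tier 1 is "approximately £0.5m".
SUPPLIER_COST_GBP: Dict[int, Tuple[int, int]] = {
    1: (500_000, 500_000),
    2: (2_000_000, 5_000_000),
    3: (2_000_000, 5_000_000),
}
TIMESCALE_MONTHS: Dict[int, Tuple[int, int]] = {1: (6, 12), 2: (24, 60), 3: (24, 60)}


@dataclass
class ComplianceEstimate:
    tier: int
    supplier_cost_gbp: Tuple[int, int]   # borne by the supplier
    staff_cost_gbp: float                # health and social care staff time (assumed inputs)
    timescale_months: Tuple[int, int]


def estimate(tier: int, staff_hours: float, hourly_rate_gbp: float) -> ComplianceEstimate:
    """Look up the quoted ranges for a tier and cost the staff time separately."""
    if tier not in SUPPLIER_COST_GBP:
        raise ValueError("tier must be 1, 2 or 3")
    return ComplianceEstimate(
        tier=tier,
        supplier_cost_gbp=SUPPLIER_COST_GBP[tier],
        staff_cost_gbp=staff_hours * hourly_rate_gbp,
        timescale_months=TIMESCALE_MONTHS[tier],
    )


if __name__ == "__main__":
    # Hypothetical: 300 hours of clinical and safety officer time at £45 per hour.
    print(estimate(2, staff_hours=300, hourly_rate_gbp=45.0))
```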

Reducing the cost of compliance

In academia, developing AI for just one part of a pathway can take three years and cost £0.5 to £1 million in technical time. The cost of testing, validating and supplying the technology often falls to the part of the supply chain nearest to the end users of the research, which is often too late. Many research and development programmes are instigated without involving end users and safety officers from the outset. A common pitfall is to apply for, or be given, funding to produce a proof of concept without addressing, from the start, the technical and clinical risks associated with implementing the AI. If research is to be realised as a developed product, gathering compliance data from the beginning helps both researchers and the clients they seek to support.
