CarefulAI created AutoDeclare in response to the Thomson Reuters Cost of Compliance Research in 2021. Calls to action arising from the research were the need for

With AutoDeclare you can automatically triage your ability to declare compliance with standards in minutes rather than months.

The aim of AutoDeclare is to optimise the human and financial capital spent on compliance. AutoDeclare can provide operational savings throughout any business that needs to comply with standards. It is priced at the cost of the standard you wish to comply with, which gives you 72 hours' access to AutoDeclare: more than enough time to understand the challenges you face when considering the cost of compliance.

When using AutoDeclare, remember that it is based on an AI model. Consequently it is not perfect, but neither are humans. You can expect it to be 92.87% accurate in its interpretation of compliance.
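As a rough illustration of what that accuracy figure means in practice, assume (our assumption; the basis of the 92.87% figure is not stated here) that it applies per clause. For a standard with $n = 30$ sections and accuracy $a = 0.9287$, the expected number of misjudged clauses is:

```latex
\mathbb{E}[\text{misjudged clauses}] = n\,(1 - a) = 30 \times (1 - 0.9287) = 30 \times 0.0713 \approx 2.1
```

Under that assumption, roughly two clause decisions per review may merit a human check.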

The AutoDeclare triage process is best shown by example. The example below is taken from the healthcare sector.

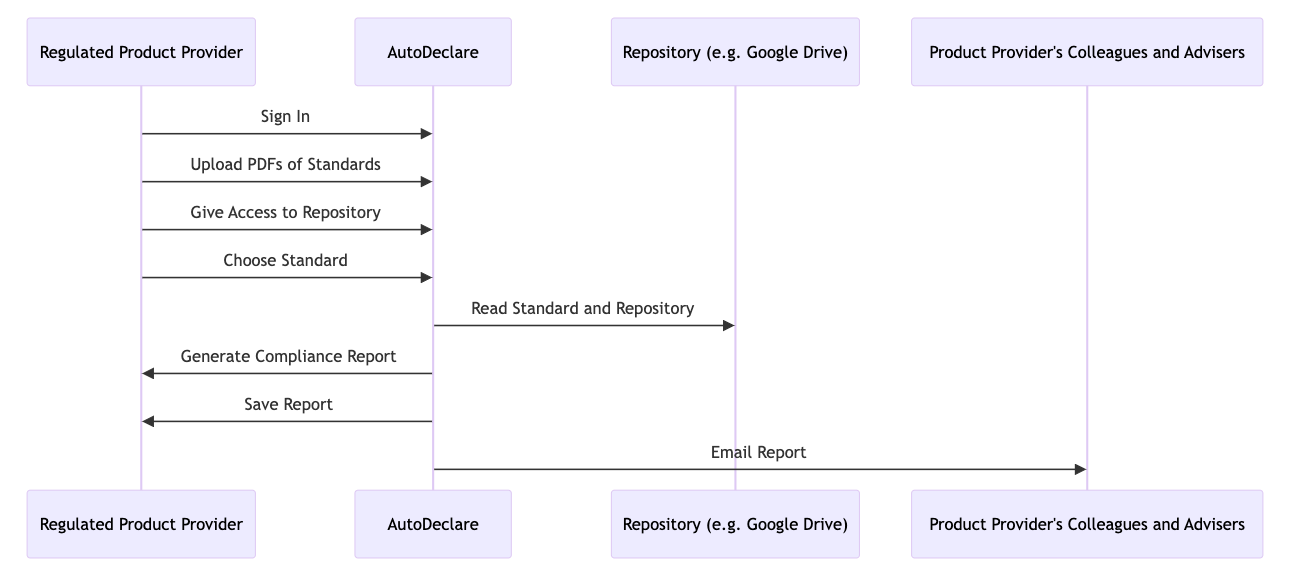

The AutoDeclare Process

AutoDeclare's data flows

The user experience is as follows:

- The AutoDeclare user uploads their evidence

- Typically, the evidence consists of Standard Operating Procedures (SOPs, e.g. as PDFs) relating to the standard they wish to comply with

- Once the user presses Upload, AutoDeclare converts the documents to text

- When the user presses Submit, AutoDeclare reads the evidence and compares it with each clause in the chosen standard

- It then returns 'Yes' if there is evidence, together with what that evidence is, or 'No' if no evidence exists

- AutoDeclare does this for each clause of the chosen standard and calculates a level of compliance
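The steps above can be sketched in Python. This is a minimal, hypothetical sketch, not AutoDeclare's implementation: AutoDeclare uses an AI model to compare evidence with each clause, whereas here a naive word-overlap score stands in for it. The SOPs are assumed to be already converted to plain text, and the names `triage`, `find_evidence`, `ClauseResult` and the `min_overlap` threshold are illustrative assumptions, as are the two example clauses.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ClauseResult:
    clause_id: str
    answer: str  # "Yes" if evidence was found, otherwise "No"
    evidence: list[str] = field(default_factory=list)


def tokenize(text: str) -> set[str]:
    """Lower-case word tokens; a crude stand-in for the AI model's reading."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_sentences(text: str) -> list[str]:
    """Split SOP text (assumed already converted from PDF) into candidate evidence sentences."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def find_evidence(clause: str, sentences: list[str], min_overlap: float = 0.5) -> list[str]:
    """Hypothetical heuristic: keep sentences sharing at least `min_overlap` of the clause's words."""
    clause_terms = tokenize(clause)
    if not clause_terms:
        return []
    return [
        s for s in sentences
        if len(clause_terms & tokenize(s)) / len(clause_terms) >= min_overlap
    ]


def triage(sop_text: str, standard: dict[str, str]) -> tuple[list[ClauseResult], float]:
    """Check every clause of the standard against the SOP text and score overall compliance (%)."""
    sentences = split_sentences(sop_text)
    results = []
    for clause_id, clause in standard.items():
        evidence = find_evidence(clause, sentences)
        results.append(ClauseResult(clause_id, "Yes" if evidence else "No", evidence))
    if not results:
        return results, 0.0
    level = 100 * sum(r.answer == "Yes" for r in results) / len(results)
    return results, level


# Hypothetical two-clause "standard" and SOP text, for illustration only.
standard = {
    "1": "Document the intended use of the AI system.",
    "2": "Record how clinical risk is monitored after deployment.",
}
sop = ("This SOP documents the intended use of the AI system for triage. "
       "Staff must wash hands.")

results, level = triage(sop, standard)
for r in results:
    print(r.clause_id, r.answer, r.evidence)
print(f"Level of compliance: {level:.0f}%")
```

Run as written, this reports 'Yes' for clause 1 (quoting the matching SOP sentence), 'No' for clause 2, and a 50% level of compliance. In this sketch, the clauses answered 'No' give one rough measure of the work remaining.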

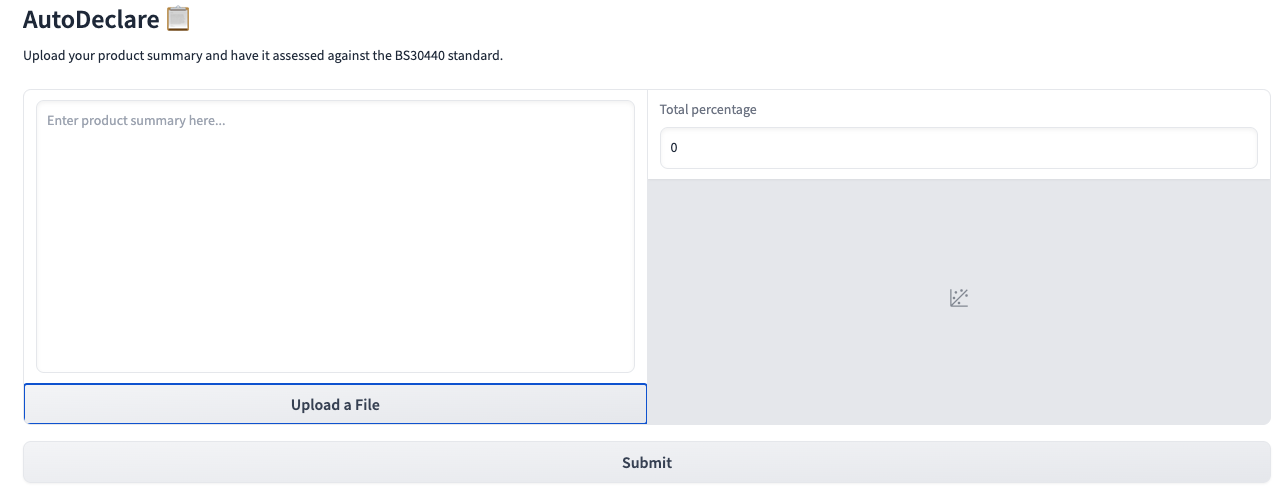

Below are some screenshots of AutoDeclare in use with BS 30440, the standard for the validation of AI in healthcare.

This is the evidence upload screen...

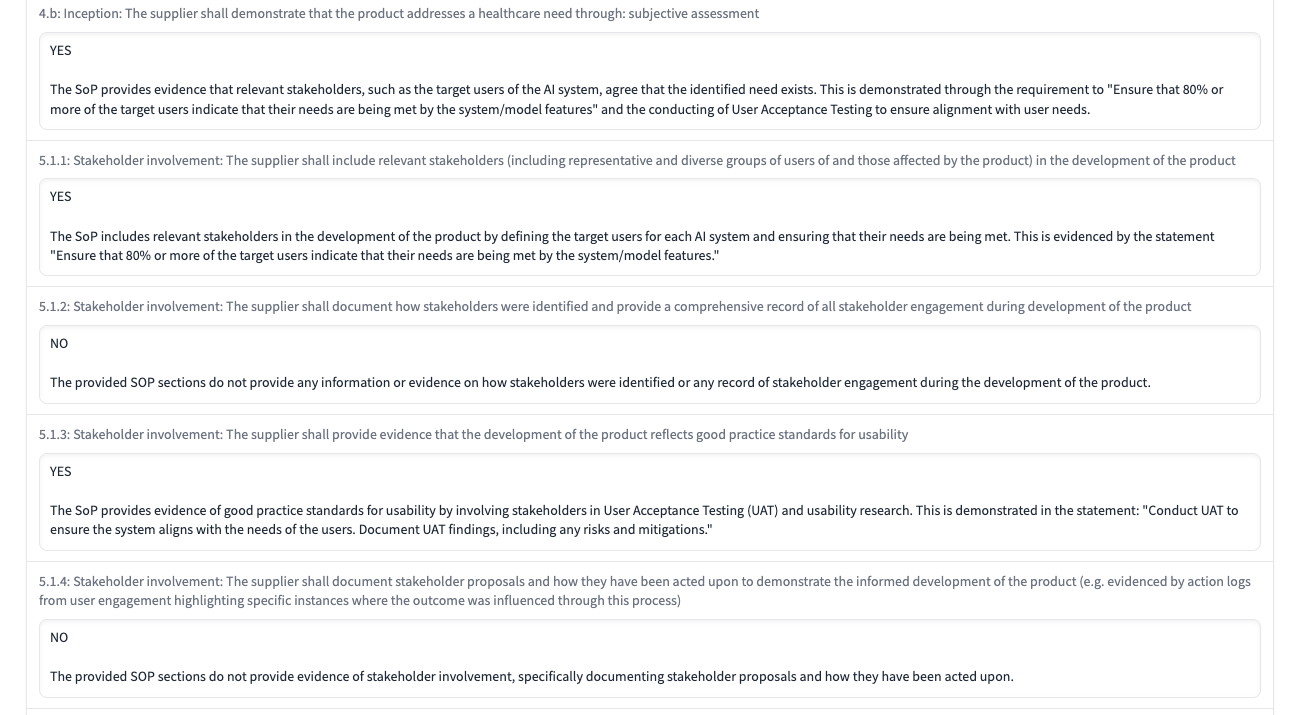

The typical output per clause is shown below...

For a standard with 30 sections, the SOP compliance review process takes 5 minutes.

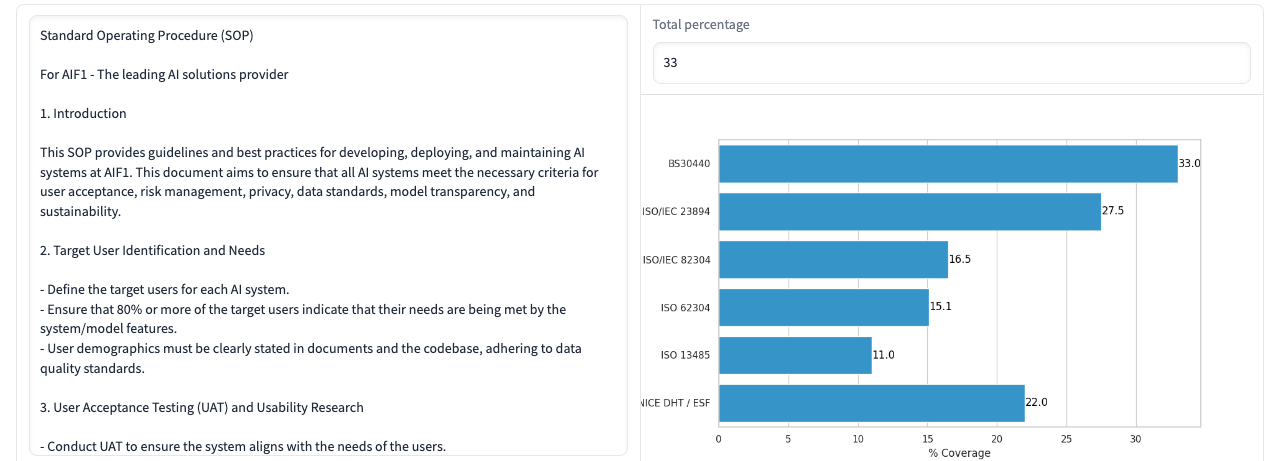

The user is also given an indication of the size of the task required to achieve compliance with the standard and with related standards.

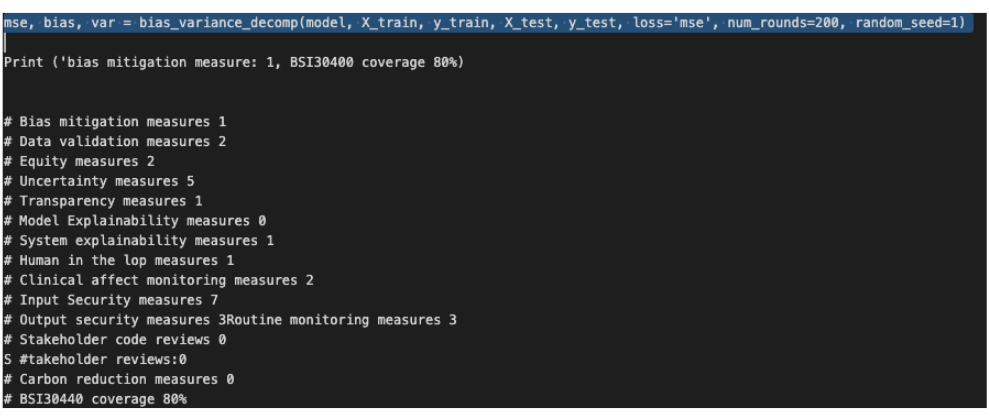

AutoDeclare Code

Below is an example of AutoDeclare's annotation of a supplier's code, within their code editor.

In the case shown, it is an audit of compliance with BS 30440, the standard for the validation of AI in healthcare.

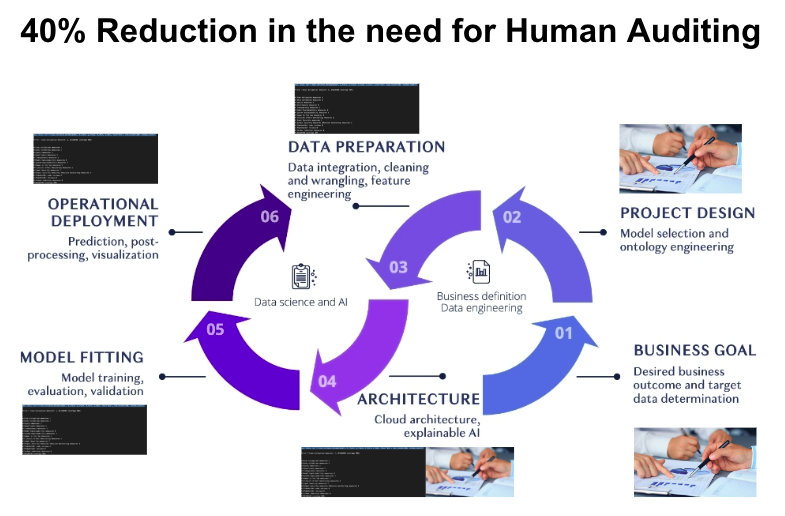

For AI systems and software, the areas where savings are made are shown below.

Download a standard today and let AutoDeclare help you focus your compliance efforts.

Standards providers include: